oneVPL¶

The oneAPI Video Processing Library (oneVPL) is a programming interface for video decoding, encoding, and processing to build portable media pipelines on CPUs, GPUs, and other accelerators. It provides API primitives for zero-copy buffer sharing, device discovery, and device selection in media-centric and video analytics workloads. oneVPL’s backward and cross-architecture compatibility ensures optimal execution on current and next-generation hardware without source code changes.

See oneVPL API Reference for the detailed API description.

oneVPL for Intel® Media Software Development Kit Users¶

oneVPL is source compatible with Intel® Media Software Development Kit (MSDK), allowing applications to use MSDK to target older hardware and oneVPL to target everything else. oneVPL offers improved usability over MSDK. Some obsolete features of MSDK have been omitted from oneVPL.

oneVPL Usability Enhancements¶

Smart dispatcher with discovery of implementation capabilities. Explore SDK Session for more details.

Simplified decoder initialization. Explore Decoding Procedures for more details.

New memory management and component (session) interoperability. Explore Internal memory management and Decoding Procedures for more details.

Improved internal threading and internal task scheduling.

Obsolete MSDK Features omitted from oneVPL¶

The following MSDK features are not included in oneVPL:

- Audio Support

oneVPL is for video processing and removes audio APIs that duplicate functionality from other audio libraries such as Sound Open Firmware.

- ENC and PAK interfaces

Available as part of Flexible Encode Infrastructure (FEI) and plugin interfaces. FEI is an Intel Graphics-specific feature designed for AVC and HEVC encoders and is not widely used by customers.

- User plugins architecture

oneVPL enables robust video acceleration through API implementations of many different video processing frameworks, making support of its own user plugin framework obsolete.

- External buffer memory management

A set of callback functions to replace internal memory allocation is obsolete.

- Video Processing extended runtime functionality

Video processing function MFXVideoVPP_RunFrameVPPAsyncEx is used for plugins only and is obsolete.

- External threading

The new threading model makes the MFXDoWork function obsolete.

The following behaviors occur when attempting to use an MSDK API that is not supported by oneVPL:

- Code compilation

Code compiled with the oneVPL API headers will generate a compile and/or link error when attempting to use a removed API.

- Code previously compiled with MSDK and used with a oneVPL runtime

Code previously compiled with MSDK and executing using a oneVPL runtime will generate an MFX_ERR_UNSUPPORTED error when calling a removed function.

MSDK APIs not present in oneVPL¶

Audio related functions:

MFXAudioCORE_SyncOperation(mfxSession session, mfxSyncPoint syncp, mfxU32 wait)

MFXAudioDECODE_Close(mfxSession session)

MFXAudioDECODE_DecodeFrameAsync(mfxSession session, mfxBitstream *bs, mfxAudioFrame *frame_out, mfxSyncPoint *syncp)

MFXAudioDECODE_DecodeHeader(mfxSession session, mfxBitstream *bs, mfxAudioParam *par)

MFXAudioDECODE_GetAudioParam(mfxSession session, mfxAudioParam *par)

MFXAudioDECODE_Init(mfxSession session, mfxAudioParam *par)

MFXAudioDECODE_Query(mfxSession session, mfxAudioParam *in, mfxAudioParam *out)

MFXAudioDECODE_QueryIOSize(mfxSession session, mfxAudioParam *par, mfxAudioAllocRequest *request)

MFXAudioDECODE_Reset(mfxSession session, mfxAudioParam *par)

MFXAudioENCODE_Close(mfxSession session)

MFXAudioENCODE_EncodeFrameAsync(mfxSession session, mfxAudioFrame *frame, mfxBitstream *buffer_out, mfxSyncPoint *syncp)

MFXAudioENCODE_GetAudioParam(mfxSession session, mfxAudioParam *par)

MFXAudioENCODE_Init(mfxSession session, mfxAudioParam *par)

MFXAudioENCODE_Query(mfxSession session, mfxAudioParam *in, mfxAudioParam *out)

MFXAudioENCODE_QueryIOSize(mfxSession session, mfxAudioParam *par, mfxAudioAllocRequest *request)

MFXAudioENCODE_Reset(mfxSession session, mfxAudioParam *par)

Flexible encode infrastructure functions:

MFXVideoENC_Close(mfxSession session)

MFXVideoENC_GetVideoParam(mfxSession session, mfxVideoParam *par)

MFXVideoENC_Init(mfxSession session, mfxVideoParam *par)

MFXVideoENC_ProcessFrameAsync(mfxSession session, mfxENCInput *in, mfxENCOutput *out, mfxSyncPoint *syncp)

MFXVideoENC_Query(mfxSession session, mfxVideoParam *in, mfxVideoParam *out)

MFXVideoENC_QueryIOSurf(mfxSession session, mfxVideoParam *par, mfxFrameAllocRequest *request)

MFXVideoENC_Reset(mfxSession session, mfxVideoParam *par)

MFXVideoPAK_Close(mfxSession session)

MFXVideoPAK_GetVideoParam(mfxSession session, mfxVideoParam *par)

MFXVideoPAK_Init(mfxSession session, mfxVideoParam *par)

MFXVideoPAK_ProcessFrameAsync(mfxSession session, mfxPAKInput *in, mfxPAKOutput *out, mfxSyncPoint *syncp)

MFXVideoPAK_Query(mfxSession session, mfxVideoParam *in, mfxVideoParam *out)

MFXVideoPAK_QueryIOSurf(mfxSession session, mfxVideoParam *par, mfxFrameAllocRequest *request)

MFXVideoPAK_Reset(mfxSession session, mfxVideoParam *par)

User Plugin functions:

MFXAudioUSER_ProcessFrameAsync(mfxSession session, const mfxHDL *in, mfxU32 in_num, const mfxHDL *out, mfxU32 out_num, mfxSyncPoint *syncp)

MFXAudioUSER_Register(mfxSession session, mfxU32 type, const mfxPlugin *par)

MFXAudioUSER_Unregister(mfxSession session, mfxU32 type)

MFXVideoUSER_GetPlugin(mfxSession session, mfxU32 type, mfxPlugin *par)

MFXVideoUSER_ProcessFrameAsync(mfxSession session, const mfxHDL *in, mfxU32 in_num, const mfxHDL *out, mfxU32 out_num, mfxSyncPoint *syncp)

MFXVideoUSER_Register(mfxSession session, mfxU32 type, const mfxPlugin *par)

MFXVideoUSER_Unregister(mfxSession session, mfxU32 type)

MFXVideoUSER_Load(mfxSession session, const mfxPluginUID *uid, mfxU32 version)

MFXVideoUSER_LoadByPath(mfxSession session, const mfxPluginUID *uid, mfxU32 version, const mfxChar *path, mfxU32 len)

MFXVideoUSER_UnLoad(mfxSession session, const mfxPluginUID *uid)

MFXDoWork(mfxSession session)

Memory functions:

MFXVideoCORE_SetBufferAllocator(mfxSession session, mfxBufferAllocator *allocator)

Video processing functions:

MFXVideoVPP_RunFrameVPPAsyncEx(mfxSession session, mfxFrameSurface1 *in, mfxFrameSurface1 *surface_work, mfxFrameSurface1 **surface_out, mfxSyncPoint *syncp)

Important

Corresponding extension buffers are also removed.

oneVPL APIs not present in MSDK¶

oneVPL dispatcher functions:

Memory management functions:

Implementation capabilities retrieval functions:

oneVPL API versioning¶

As a successor of MSDK, the oneVPL API version starts from 2.0.

Experimental APIs in oneVPL are protected with the following macro:

#if (MFX_VERSION >= MFX_VERSION_NEXT)

To use the API, define the MFX_VERSION_USE_LATEST macro.

Acronyms and Abbreviations¶

| Acronyms / Abbreviations | Meaning |
|---|---|
| API | Application Programming Interface |
| AVC | Advanced Video Coding (same as H.264 and MPEG-4, part 10) |
| Direct3D | Microsoft* Direct3D* version 9 or 11.1 |
| Direct3D9 | Microsoft* Direct3D* version 9 |
| Direct3D11 | Microsoft* Direct3D* version 11.1 |
| DRM | Digital Rights Management |
| DXVA2 | Microsoft DirectX* Video Acceleration standard 2.0 |
| H.264 | ISO*/IEC* 14496-10 and ITU-T* H.264, MPEG-4 Part 10, Advanced Video Coding, May 2005 |
| HRD | Hypothetical Reference Decoder |
| IDR | Instantaneous decoding refresh picture, a term used in the H.264 specification |
| LA | Look Ahead. Special encoding mode where the encoder performs pre-analysis of several frames before actual encoding starts. |
| MPEG | Moving Picture Experts Group |
| MPEG-2 | ISO/IEC 13818-2 and ITU-T H.262, MPEG-2 Part 2, Information Technology - Generic Coding of Moving Pictures and Associated Audio Information: Video, 2000 |
| NAL | Network Abstraction Layer |
| NV12 | A color format for raw video frames |
| PPS | Picture Parameter Set |
| QP | Quantization Parameter |
| RGB3 | Twenty-four-bit RGB color format. Also known as RGB24 |
| RGB4 | Thirty-two-bit RGB color format. Also known as RGB32 |
| SDK | Intel® Media Software Development Kit – SDK |
| SEI | Supplemental Enhancement Information |
| SPS | Sequence Parameter Set |
| VA API | Video Acceleration API |
| VBR | Variable Bit Rate |
| VBV | Video Buffering Verifier |
| VC-1 | SMPTE* 421M, SMPTE Standard for Television: VC-1 Compressed Video Bitstream Format and Decoding Process, August 2005 |
| video memory | Memory used by a hardware acceleration device, also known as GPU, to hold frame and other types of video data |
| VPP | Video Processing |
| VUI | Video Usability Information |
| YUY2 | A color format for raw video frames |
| YV12 | A color format for raw video frames, similar to IYUV with U and V reversed |
| IYUV | A color format for raw video frames, also known as I420 |
| P010 | A color format for raw video frames, extends NV12 for 10 bit |
| I010 | A color format for raw video frames, extends IYUV/I420 for 10 bit |
| GPB | Generalized P/B picture. B-picture containing only forward references in both L0 and L1 |
| HDR | High Dynamic Range |
| BRC | Bit Rate Control |
| MCTF | Motion Compensated Temporal Filter. Special type of noise reduction filter that utilizes motion to improve the efficiency of video denoising |
| iGPU/iGfx | Integrated Intel® HD Graphics |
| dGPU/dGfx | Discrete Intel® Graphics |

Architecture¶

SDK functions fall into the following categories:

| Category | Description |
|---|---|
| DECODE | Decode compressed video streams into raw video frames |
| ENCODE | Encode raw video frames into compressed bitstreams |
| VPP | Perform video processing on raw video frames |
| CORE | Auxiliary functions for synchronization |
| Misc | Global auxiliary functions |

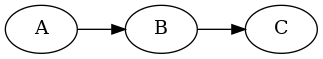

With the exception of the global auxiliary functions, SDK functions are named after their functioning domain and category, as illustrated below. Here, SDK only exposes video domain functions.

Applications use SDK functions by linking with the SDK dispatcher library, as illustrated below. The dispatcher library identifies the hardware acceleration device on the running platform, determines the most suitable platform library, and then redirects function calls. If the dispatcher is unable to detect any suitable platform-specific hardware, the dispatcher redirects SDK function calls to the default software library.

![digraph {

rankdir=TB;

Application [shape=record label="Application" ];

Sdk [shape=record label="SDK Dispatcher Library"];

Lib1 [shape=record label="SDK Library 1 (CPU)"];

Lib2 [shape=record label="SDK Library 2 (Platform 1)"];

Lib3 [shape=record label="SDK Library 3 (Platform 2)"];

Application->Sdk;

Sdk->Lib1;

Sdk->Lib2;

Sdk->Lib3;

}](../../../_images/graphviz-2cdecb74b47d7ca11a94c95086833111768baaa4.png)

Video Decoding¶

The DECODE class of functions takes a compressed bitstream as input and converts it to raw frames as output.

DECODE processes only pure or elementary video streams. The library cannot process bitstreams that reside in a container format, such as MP4 or MPEG. The application must first de-multiplex the bitstreams. De-multiplexing extracts pure video streams out of the container format. The application can provide the input bitstream as one complete frame of data, less than one frame (a partial frame), or multiple frames. If only a partial frame is provided, DECODE internally constructs one frame of data before decoding it.

The time stamp of a bitstream buffer must be accurate to the first byte of the frame data. That is, the first byte of a video coding layer NAL unit for H.264, or picture header for MPEG-2 and VC-1. DECODE passes the time stamp to the output surface for audio and video multiplexing or synchronization.

Decoding the first frame is a special case, since DECODE does not provide enough configuration parameters to correctly process the bitstream. DECODE searches for the sequence header (a sequence parameter set in H.264, or a sequence header in MPEG-2 and VC-1) that contains the video configuration parameters used to encode subsequent video frames. The decoder skips any bitstream prior to that sequence header. In the case of multiple sequence headers in the bitstream, DECODE adopts the new configuration parameters, ensuring proper decoding of subsequent frames.

DECODE supports repositioning of the bitstream at any time during decoding. Because there is no way to obtain the correct sequence header associated with the specified bitstream position after a position change, the application must supply DECODE with a sequence header before the decoder can process the next frame at the new position. If the sequence header required to correctly decode the bitstream at the new position is not provided by the application, DECODE treats the new location as a new “first frame” and follows the procedure for decoding first frames.

Video Encoding¶

The ENCODE class of functions takes raw frames as input and compresses them into a bitstream.

Input frames usually come encoded in a repeated pattern called the Group of Pictures (GOP) sequence. For example, a GOP sequence can start from an I-frame, followed by a few B-frames, a P-frame, and so on. ENCODE uses an MPEG-2 style GOP sequence structure that can specify the length of the sequence and the distance between two key frames: I- or P-frames. A GOP sequence ensures that the segments of a bitstream do not completely depend upon each other. It also enables decoding applications to reposition the bitstream.

ENCODE processes input frames in two ways:

Display order: ENCODE receives input frames in the display order. A few GOP structure parameters specify the GOP sequence during ENCODE initialization. Scene change results from the video processing stage of a pipeline can alter the GOP sequence.

Encoded order: ENCODE receives input frames in their encoding order. The application must specify the exact input frame type for encoding. ENCODE references GOP parameters to determine when to insert information such as an end-of-sequence into the bitstream.

An ENCODE output consists of one frame of a bitstream with the time stamp passed from the input frame. The time stamp is used for multiplexing subsequent video with other associated data such as audio. The SDK library provides only pure video stream encoding. The application must provide its own multiplexing.

ENCODE supports the following bitrate control algorithms: constant bitrate, variable bitrate (VBR), and constant Quantization Parameter (QP). In the constant bitrate mode, ENCODE performs stuffing when the size of the least-compressed frame is smaller than what is required to meet the Hypothetical Reference Decoder (HRD) buffer (or VBR) requirements. (Stuffing is a process that appends zeros to the end of encoded frames.)

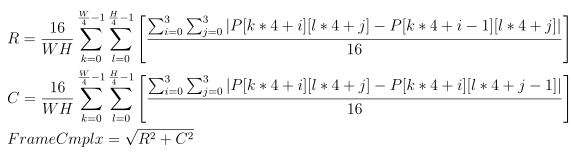

Video Processing¶

Video processing (VPP) takes raw frames as input and provides raw frames as output.

![digraph {

rankdir=LR;

F1 [shape=record label="Function 1" ];

F2 [shape=record label="Function 2"];

F3 [shape=record label="Additional Filters"];

F4 [shape=record label="Function N-1" ];

F5 [shape=record label="Function N"];

F1->F2->F3->F4->F5;

}](../../../_images/graphviz-b9f074540efc2209ea06cc6b39cc7bed719c402d.png)

The actual conversion process is a chain operation with many single-function filters, as Figure 3 illustrates. The application specifies the input and output format, and the SDK configures the pipeline accordingly. The application can also attach one or more hint structures to configure individual filters or turn them on and off. Unless specifically instructed, the SDK builds the pipeline in a way that best utilizes hardware acceleration or generates the best video processing quality.

Table 1 shows the SDK video processing features. The application can configure supported video processing features through the video processing I/O parameters. The application can also configure optional features through hints. See “Video Processing procedure / Configuration” for more details on how to configure optional filters.

| Video Processing Features | Configuration |
|---|---|
| Convert color format from input to output | I/O parameters |
| De-interlace to produce progressive frames at the output | I/O parameters |
| Crop and resize the input frames | I/O parameters |
| Convert input frame rate to match the output | I/O parameters |
| Perform inverse telecine operations | I/O parameters |
| Fields weaving | I/O parameters |
| Fields splitting | I/O parameters |
| Remove noise | hint (optional feature) |
| Enhance picture details/edges | hint (optional feature) |
| Adjust the brightness, contrast, saturation, and hue settings | hint (optional feature) |
| Perform image stabilization | hint (optional feature) |
| Convert input frame rate to match the output, based on frame interpolation | hint (optional feature) |
| Perform detection of picture structure | hint (optional feature) |

Color Conversion Support:

Output Color> |

||||||

|---|---|---|---|---|---|---|

Input Color |

NV12 |

RGB32 |

P010 |

P210 |

NV16 |

A2RGB10 |

RGB4 (RGB32) |

X (limited) |

X (limited) |

||||

NV12 |

X |

X |

X |

X |

||

YV12 |

X |

X |

||||

UYVY |

X |

|||||

YUY2 |

X |

X |

||||

P010 |

X |

X |

X |

X |

||

P210 |

X |

X |

X |

X |

X |

|

NV16 |

X |

X |

X |

Note

‘X’ indicates a supported function.

Note

The SDK video processing pipeline supports limited functionality for RGB4 input. Only the filters required to convert the input format to the output format are included in the pipeline. All optional filters are skipped. See the description of the MFX_WRN_FILTER_SKIPPED warning in the mfxStatus enum for details on how to retrieve the list of active filters.

Deinterlacing/Inverse Telecine Support in VPP:

Input Field Rate (fps) Interlaced |

Output Frame Rate (fps) Progressive |

||||||

23.976 |

25 |

29.97 |

30 |

50 |

59.94 |

60 |

|

29.97 |

Inverse Telecine |

X |

|||||

50 |

X |

X |

|||||

59.94 |

X |

X |

|||||

60 |

X |

X |

|||||

Note

‘X’ indicates a supported function.

This table describes the pure deinterlacing algorithm. The application can combine it with frame rate conversion to achieve any desired input/output frame rate ratio. Note that the input rate in this table is a field rate, that is, the number of video fields in one second of video. The SDK uses frame rate in all configuration parameters, so the input field rate must be divided by two during SDK configuration. For example, the 60i to 60p conversion in this table is represented by the bottom-right cell. It should be described in mfxVideoParam as an input frame rate of 30 and an output frame rate of 60.
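The field-rate to frame-rate arithmetic above can be sketched as follows. This is a minimal illustration, not SDK code: `rate_t` and `field_rate_to_frame_rate` are hypothetical names, and the rational representation (numerator/denominator) is an assumption chosen so that rates such as 59.94 (60000/1001) stay exact.

```c
#include <assert.h>

/* Hypothetical stand-in for a rational rate (numerator/denominator).
 * It is not an SDK type. */
typedef struct {
    unsigned num;
    unsigned den;
} rate_t;

/* Greatest common divisor, used to reduce the fraction. */
static unsigned gcd_u(unsigned a, unsigned b)
{
    while (b != 0) {
        unsigned t = a % b;
        a = b;
        b = t;
    }
    return a;
}

/* Convert an interlaced field rate to the frame rate used in configuration:
 * two fields make one frame, so the rate is halved. */
static rate_t field_rate_to_frame_rate(rate_t field_rate)
{
    assert(field_rate.num != 0 && field_rate.den != 0);
    rate_t frame_rate = { field_rate.num, field_rate.den * 2u };
    unsigned g = gcd_u(frame_rate.num, frame_rate.den);
    frame_rate.num /= g;
    frame_rate.den /= g;
    return frame_rate;
}
```

For the 60i to 60p case, a field rate of 60/1 yields an input frame rate of 30/1, while the output frame rate stays 60/1.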

The SDK supports two HW-accelerated deinterlacing algorithms: BOB DI (VAProcDeinterlacingBob in Linux libVA terms) and Advanced DI (VAProcDeinterlacingMotionAdaptive). The default is ADI (Advanced DI), which uses reference frames and has better quality. BOB DI is faster than ADI, so the user can choose between speed and quality.

The user can select the DI mode explicitly via mfxExtVPPDeinterlacing.

There is one special mode of deinterlacing available in combination with frame rate conversion. If the VPP input frame is interlaced (TFF or BFF), the output is progressive, and the ratio between the source frame rate and the destination frame rate is ½ (for example, 30 to 60, 29.97 to 59.94, or 25 to 50), a special VPP mode is turned on: for 30 interlaced input frames the application gets 60 different progressive output frames.
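The condition for this special mode can be expressed as a small predicate. The sketch below only illustrates the rule as stated. `pic_struct_t` and `is_double_rate_deinterlace` are hypothetical names, not SDK identifiers, and the rates are assumed to be given as numerator/denominator pairs.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical picture-structure tags for this sketch (not SDK constants). */
typedef enum { PIC_PROGRESSIVE, PIC_TFF, PIC_BFF } pic_struct_t;

/* True when the input is interlaced, the output is progressive, and the
 * output frame rate is exactly twice the input frame rate. */
static bool is_double_rate_deinterlace(pic_struct_t in, pic_struct_t out,
                                       unsigned in_num, unsigned in_den,
                                       unsigned out_num, unsigned out_den)
{
    assert(in_den != 0 && out_den != 0);
    bool interlaced_in = (in == PIC_TFF || in == PIC_BFF);
    /* Compare out == 2 * in by cross-multiplication to avoid floating point. */
    bool doubled = (unsigned long long)out_num * in_den ==
                   2ull * in_num * out_den;
    return interlaced_in && out == PIC_PROGRESSIVE && doubled;
}
```

Cross-multiplication keeps the 29.97 to 59.94 case (30000/1001 to 60000/1001) exact, which a floating-point comparison might not.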

Color formats supported by VPP filters:

Color> |

|||||||

|---|---|---|---|---|---|---|---|

Filter |

RGB4 (RGB32) |

NV12 |

YV12 |

YUY2 |

P010 |

P210 |

NV16 |

Denoise |

X |

||||||

MCTF |

X |

||||||

Deinterlace |

X |

||||||

Image stabilization |

X |

||||||

Frame rate conversion |

X |

||||||

Resize |

X |

X |

X |

X |

|||

Detail |

X |

||||||

Color conversion |

X |

X |

X |

X |

X |

X |

X |

Composition |

X |

X |

|||||

Field copy |

X |

||||||

Fields weaving |

X |

||||||

Fields splitting |

X |

Note

‘X’ indicates a supported function.

Note

The SDK video processing pipeline supports limited HW acceleration for P010 format - zeroed mfxFrameInfo::Shift leads to partial acceleration.

Note

The SDK video processing pipeline does not support HW acceleration for P210 format.

Programming Guide¶

This chapter describes the concepts used in programming the SDK.

The application must use the include file mfxvideo.h (for C/C++ programming) and link the SDK dispatcher library, libmfx.so.

Include these files:

#include "mfxvideo.h" /* The SDK include file */

Link this library:

libmfx.so /* The SDK dynamic dispatcher library (Linux)*/

Status Codes¶

The SDK functions organize into classes for easy reference. The classes include ENCODE (encoding functions), DECODE (decoding functions), and VPP (video processing functions).

Init, Reset and Close are member functions within the ENCODE, DECODE and VPP classes that initialize, restart and de-initialize specific operations defined for the class. Call all other member functions within a given class (except Query and QueryIOSurf) within the Init … Reset (optional) … Close sequence.

The Init and Reset member functions both set up necessary internal structures for media processing. The difference between the two is that the Init functions allocate memory while the Reset functions only reuse allocated internal memory. Therefore, Reset can fail if the SDK needs to allocate additional memory. Reset functions can also fine-tune ENCODE and VPP parameters during those processes or reposition a bitstream during DECODE.

All SDK functions return status codes to indicate whether an operation succeeded or failed. See the mfxStatus enumerator for all defined status codes. The status code MFX_ERR_NONE indicates that the function successfully completed its operation.

Status codes are less than MFX_ERR_NONE for all errors and greater than MFX_ERR_NONE for all warnings.

If an SDK function returns a warning, it has sufficiently completed its operation, although the output of the function might not be strictly reliable. The application must check the validity of the output generated by the function.

If an SDK function returns an error (except MFX_ERR_MORE_DATA, MFX_ERR_MORE_SURFACE, or MFX_ERR_MORE_BITSTREAM), the function aborts the operation. The application must call either the Reset function to put the class back to a clean state, or the Close function to terminate the operation. The behavior is undefined if the application continues to call any class member functions without a Reset or Close. To avoid memory leaks, always call the Close function after Init.
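The status-code rules in this section can be condensed into a small dispatch helper. This is a sketch under stated assumptions: the enum below is a stand-in subset so the fragment compiles without the SDK headers, its numeric values are assumptions that only need to respect the documented sign convention, and `next_action_t` and `classify_status` are hypothetical names.

```c
/* Stand-in subset of status codes so the sketch compiles on its own.
 * A real application takes mfxStatus from the SDK headers; the numeric
 * values here are assumptions (errors negative, warnings positive). */
typedef enum {
    MFX_ERR_NONE           = 0,
    MFX_ERR_UNSUPPORTED    = -3,
    MFX_ERR_MORE_DATA      = -10,
    MFX_ERR_MORE_SURFACE   = -11,
    MFX_ERR_MORE_BITSTREAM = -18,
    MFX_WRN_FILTER_SKIPPED = 10
} mfxStatus;

/* Hypothetical summary of what the application should do next. */
typedef enum {
    ACTION_CONTINUE,        /* MFX_ERR_NONE: operation completed */
    ACTION_VALIDATE_OUTPUT, /* warning: completed, output must be checked */
    ACTION_SUPPLY_MORE,     /* more input data, surfaces, or bitstream space */
    ACTION_RESET_OR_CLOSE   /* error: operation aborted */
} next_action_t;

static next_action_t classify_status(mfxStatus sts)
{
    if (sts == MFX_ERR_NONE)
        return ACTION_CONTINUE;
    if (sts > MFX_ERR_NONE)
        return ACTION_VALIDATE_OUTPUT;
    if (sts == MFX_ERR_MORE_DATA ||
        sts == MFX_ERR_MORE_SURFACE ||
        sts == MFX_ERR_MORE_BITSTREAM)
        return ACTION_SUPPLY_MORE;
    return ACTION_RESET_OR_CLOSE;
}
```

Treating the three "more" codes separately mirrors the exception in the text: they are negative like other errors but do not abort the operation.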

SDK Session¶

Before calling any SDK functions, the application must initialize the SDK library and create an SDK session. An SDK session maintains context for the use of any of DECODE, ENCODE, or VPP functions.

Media SDK dispatcher (legacy)¶

The function MFXInit() starts (initializes) an SDK session. MFXClose() closes (de-initializes) the SDK session.

To avoid memory leaks, always call MFXClose() after MFXInit().

The application can initialize a session as a software-based session (MFX_IMPL_SOFTWARE) or a hardware-based session (MFX_IMPL_HARDWARE). In the former case, the SDK functions execute on a CPU, and in the latter case, the SDK functions use platform acceleration capabilities. For platforms that expose multiple graphic devices, the application can initialize the SDK session on any alternative graphic device (MFX_IMPL_HARDWARE1,…, MFX_IMPL_HARDWARE4).

The application can also initialize a session to be automatic (MFX_IMPL_AUTO or MFX_IMPL_AUTO_ANY), instructing the dispatcher library to detect the platform capabilities and choose the best SDK library available. After initialization, the SDK returns the actual implementation through the MFXQueryIMPL() function.

Internally, the dispatcher works as follows:

It searches for the shared library with the specific name:

| OS | Name | Description |
|---|---|---|
| Linux | libmfxsw64.so.1 | 64-bit software-based implementation |
| Linux | libmfxsw32.so.1 | 32-bit software-based implementation |
| Linux | libmfxhw64.so.1 | 64-bit hardware-based implementation |
| Linux | libmfxhw32.so.1 | 32-bit hardware-based implementation |
| Windows | libmfxsw64.dll | 64-bit software-based implementation |
| Windows | libmfxsw32.dll | 32-bit software-based implementation |
| Windows | libmfxhw64.dll | 64-bit hardware-based implementation |
| Windows | libmfxhw32.dll | 32-bit hardware-based implementation |

Once the library is loaded, the dispatcher obtains the address of each SDK function. See the table with the list of functions to export.
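The naming scheme in the table follows a regular pattern: a "libmfx" prefix, "sw" or "hw", the bitness, and an OS-specific suffix. A minimal sketch of composing such a name is shown here; `os_t`, `impl_t`, and `legacy_library_name` are hypothetical helpers for illustration and are not part of the dispatcher API.

```c
#include <assert.h>
#include <stdio.h>

typedef enum { OS_LINUX, OS_WINDOWS } os_t;            /* hypothetical */
typedef enum { IMPL_SOFTWARE, IMPL_HARDWARE } impl_t;  /* hypothetical */

/* Build the library file name following the pattern in the table:
 * "libmfx" + "sw"/"hw" + "32"/"64" + ".so.1" (Linux) or ".dll" (Windows).
 * Returns the number of characters written, or -1 if the buffer is too small. */
static int legacy_library_name(os_t os, impl_t impl, int bits,
                               char *buf, size_t buf_size)
{
    assert(buf != NULL);
    assert(bits == 32 || bits == 64);
    int n = snprintf(buf, buf_size, "libmfx%s%d%s",
                     impl == IMPL_HARDWARE ? "hw" : "sw",
                     bits,
                     os == OS_LINUX ? ".so.1" : ".dll");
    return (n < 0 || (size_t)n >= buf_size) ? -1 : n;
}
```

A loader built on this would then pass the resulting name to the platform's dynamic loading facility; that step is omitted here.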

oneVPL dispatcher¶

oneVPL dispatcher extends the legacy dispatcher by providing additional ability to select appropriate implementation based on the implementation capabilities. Implementation capabilities include information about supported decoders, encoders and VPP filters. For each supported encoder, decoder and filter, capabilities include information about supported memory types, color formats, image (frame) size in pixels and so on.

This is the recommended way for the user to configure the dispatcher’s capabilities search filters and create a session based on a suitable implementation:

- Create a loader (MFXLoad() dispatcher function).

- Create the loader’s config (MFXCreateConfig() dispatcher function).

- Add config properties (MFXSetConfigFilterProperty() dispatcher function).

- Explore the available implementations (MFXEnumImplementations() dispatcher function).

- Create a suitable session (MFXCreateSession() dispatcher function).

This is the application termination procedure:

- Destroy the session (MFXClose() function).

- Destroy the loader (MFXUnload() dispatcher function).

Note

Multiple loader instances can be created.

Note

Each loader may have multiple config objects associated with it.

Important

One config object can handle only one filter property.

Note

Multiple sessions can be created by using one loader object.

When the dispatcher searches for an implementation, it uses the following priority rules:

- A HW implementation has priority over a SW implementation.

- A Gen HW implementation has priority over a VSI HW implementation.

- A higher API version has priority over a lower API version.

Note

Implementation type has priority over the API version. In other words, the dispatcher must return the implementation with the highest priority whose API version is greater than or equal to the requested one.
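The priority rules can be read as a lexicographic comparison: implementation type first, API version second. The sketch below illustrates that reading only. The types, the numeric ranking in `accel_t`, and both functions are hypothetical, and the version check assumes a plain ordered comparison of major.minor.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical ranking: a larger value means higher priority. */
typedef enum { ACCEL_SW = 0, ACCEL_HW_VSI = 1, ACCEL_HW_GEN = 2 } accel_t;

typedef struct {
    accel_t  accel;
    unsigned api_major;
    unsigned api_minor;
} impl_candidate_t;

/* Returns >0 if a is preferred over b, <0 if b is preferred, 0 if equal. */
static int compare_candidates(const impl_candidate_t *a,
                              const impl_candidate_t *b)
{
    if (a->accel != b->accel)
        return a->accel > b->accel ? 1 : -1;
    if (a->api_major != b->api_major)
        return a->api_major > b->api_major ? 1 : -1;
    if (a->api_minor != b->api_minor)
        return a->api_minor > b->api_minor ? 1 : -1;
    return 0;
}

/* Pick the preferred candidate whose API version is at least the requested
 * one. Returns NULL when no candidate qualifies. */
static const impl_candidate_t *pick_best(const impl_candidate_t *list,
                                         size_t count,
                                         unsigned req_major,
                                         unsigned req_minor)
{
    assert(list != NULL || count == 0);
    const impl_candidate_t *best = NULL;
    for (size_t i = 0; i < count; ++i) {
        const impl_candidate_t *c = &list[i];
        bool new_enough = c->api_major > req_major ||
                          (c->api_major == req_major &&
                           c->api_minor >= req_minor);
        if (!new_enough)
            continue;
        if (best == NULL || compare_candidates(c, best) > 0)
            best = c;
    }
    return best;
}
```

With candidates SW 2.1, VSI HW 2.0, and Gen HW 2.0, a request for API 2.0 selects the Gen HW entry even though the SW entry reports a newer API, while a request for API 2.1 leaves only the SW entry.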

The dispatcher searches for implementations in the following folders at runtime (in priority order):

- User-defined search folders.

- oneVPL package.

- Standalone MSDK package (or driver).

The user can develop their own implementation and guide the oneVPL dispatcher to load it by providing a list of search folders. How this is done depends on the OS.

- Linux: The user can provide a colon-separated list of folders in the ONEVPL_SEARCH_PATH environment variable.

- Windows: The user can provide a semicolon-separated list of folders in the ONEVPL_SEARCH_PATH environment variable. Alternatively, the user can use the Windows registry.
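Splitting such a folder list is straightforward. The following sketch shows one way to walk the entries without copying them. `folder_cb` and `for_each_search_folder` are hypothetical names, and skipping empty entries is an assumption of this sketch rather than documented dispatcher behavior.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Callback invoked once per folder entry; the entry is not NUL-terminated. */
typedef void (*folder_cb)(const char *start, size_t len, void *user);

/* Visit each non-empty entry of a separator-delimited folder list.
 * `sep` would be ':' on Linux and ';' on Windows. Entries are reported as
 * (pointer, length) pairs into the original string. Returns the number of
 * entries visited; `cb` may be NULL to only count them. */
static size_t for_each_search_folder(const char *list, char sep,
                                     folder_cb cb, void *user)
{
    assert(list != NULL);
    assert(sep != '\0');
    size_t count = 0;
    const char *p = list;
    while (*p != '\0') {
        const char *end = strchr(p, sep);
        size_t len = end ? (size_t)(end - p) : strlen(p);
        if (len > 0) {
            if (cb)
                cb(p, len, user);
            ++count;
        }
        p += len;
        if (*p == sep)
            ++p;  /* step over the separator */
    }
    return count;
}
```

An application-side tool could obtain the list with the standard `getenv("ONEVPL_SEARCH_PATH")` call and pass it to this helper after checking for NULL.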

Different SW implementations are supported by the dispatcher. The user can use the mfxImplDescription::VendorID, mfxImplDescription::VendorImplID, or mfxImplDescription::ImplName field to search for a particular implementation.

Internally, the dispatcher works as follows:

- The dispatcher loads every shared library found in the given search folders.

- For each loaded library, the dispatcher tries to resolve the address of the MFXQueryImplCapabilities() function to collect the implementation’s capabilities.

- Once the user requests to create a session based on this implementation, the dispatcher obtains the address of each SDK function. See the table with the list of functions to export.

This table summarizes the environment variables that control the dispatcher behavior:

| Variable | Purpose |
|---|---|
| ONEVPL_SEARCH_PATH | List of user-defined search folders. |

Note

Each implementation must support both dispatchers for backward compatibility with existing applications.

Multiple Sessions¶

Each SDK session can run exactly one instance of DECODE, ENCODE and VPP functions. This is good for a simple transcoding operation. If the application needs more than one instance of DECODE, ENCODE and VPP in a complex transcoding setting, or needs more simultaneous transcoding operations to balance CPU/GPU workloads, the application can initialize multiple SDK sessions. Each SDK session can independently be a software-based session or hardware-based session.

The application can use multiple SDK sessions independently or run a “joined” session. Independently operated SDK sessions cannot share data unless the application explicitly synchronizes session operations (to ensure that data is valid and complete before passing from the source to the destination session.)

To join two sessions together, the application can use the function MFXJoinSession(). Alternatively, the application can use the function MFXCloneSession() to duplicate an existing session. Joined SDK sessions work together as a single session, sharing all session resources, threading control and prioritization operations (except hardware acceleration devices and external allocators). When joined, one of the sessions (the first join) serves as a parent session, scheduling execution resources, with all other child sessions relying on the parent session for resource management.

With joined sessions, the application can set the priority of session operations through the MFXSetPriority() function. A lower priority session receives fewer CPU cycles. Session priority does not affect hardware accelerated processing.

After the completion of all session operations, the application can use the function MFXDisjoinSession() to remove the joined state of a session. Do not close the parent session until all child sessions are disjoined or closed.

Frame and Fields¶

In SDK terminology, a frame (or frame surface, interchangeably) contains either a progressive frame or a complementary field pair. If the frame is a complementary field pair, the odd lines of the surface buffer store the top fields and the even lines of the surface buffer store the bottom fields.

Frame Surface Locking¶

During encoding, decoding or video processing, cases arise that require reserving input or output frames for future use. In the case of decoding, for example, a frame that is ready for output must remain as a reference frame until the current sequence pattern ends. The usual approach is to cache the frames internally. This method requires a copy operation, which can significantly reduce performance.

SDK functions define a frame-locking mechanism to avoid the need for copy operations. This mechanism is as follows:

The application allocates a pool of frame surfaces large enough to include SDK function I/O frame surfaces and internal cache needs. Each frame surface maintains a Locked counter, part of the mfxFrameData structure. Initially, the Locked counter is set to zero.

The application calls an SDK function with frame surfaces from the pool whose Locked counter is set as appropriate: for decoding or video processing operations, where the SDK writes to the surfaces, the counter must be zero. If the SDK function needs to reserve any frame surface, the SDK function increases the Locked counter of the frame surface. A non-zero Locked counter indicates that the calling application must treat the frame surface as “in use.” That is, the application can read, but cannot alter, move, delete or free the frame surface.

In subsequent SDK executions, if the frame surface is no longer in use, the SDK decreases the Locked counter. When the Locked counter reaches zero, the application is free to do as it wishes with the frame surface.

In general, the application must not increase or decrease the Locked counter, since the SDK manages this field. If, for some reason, the application needs to modify the Locked counter, the operation must be atomic to avoid a race condition.

Attention

Modifying the Locked counter is not recommended.
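As an illustration of the locking rule, the sketch below scans a pool for a surface whose Locked counter is zero. The Surface struct and find_unlocked_surface() are hypothetical stand-ins rather than SDK types; in a real application the counter is the Locked field of the mfxFrameData structure. The application only reads the counter and never writes it.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for a frame surface; only the counter matters here. */
typedef struct {
    unsigned short Locked;  /* non-zero while the SDK still uses the surface */
} Surface;

/* Returns the index of the first surface with Locked == 0, or -1 if every
 * surface in the pool is still in use. The counter is read, never modified. */
int find_unlocked_surface(const Surface *pool, size_t count) {
    assert(pool != NULL || count == 0);
    for (size_t i = 0; i < count; ++i) {
        if (pool[i].Locked == 0)
            return (int)i;
    }
    return -1;
}
```

If the function returns -1, the pool is exhausted and the application would typically synchronize an outstanding operation before retrying.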

Starting from API version 2.0, the mfxFrameSurfaceInterface structure, a set of callback functions for working with frames, was introduced for mfxFrameSurface1. This interface defines mfxFrameSurface1 as a reference-counted object that can be allocated by the SDK or by the application. The application has to follow the general rules for operating on reference-counted objects. For example, when surfaces are allocated by the SDK during MFXVideoDECODE_DecodeFrameAsync or with the help of MFXMemory_GetSurfaceForVPP or MFXMemory_GetSurfaceForEncode, the application has to call the corresponding mfxFrameSurfaceInterface->(*Release) for surfaces that are no longer in use.

Attention

Distinguish the Locked counter, which defines read/write access policies, from the reference counter, which manages the lifetime of frames.

Note

All mfxFrameSurface1 structures starting from mfxFrameSurface1::mfxStructVersion = {1,1} support mfxFrameSurfaceInterface.
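The difference between the two counters can be modeled with a small reference-counted object. This is a minimal sketch under stated assumptions: RcSurface and its functions are hypothetical, the counter is not thread-safe, and nothing here reflects how an SDK implementation actually stores its reference count.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical model of a reference-counted surface. */
typedef struct {
    unsigned refs;          /* lifetime: the object is freed when this hits 0 */
    unsigned short locked;  /* access policy: non-zero means "read only" */
} RcSurface;

/* Creates a surface owned by the caller (reference count 1). */
RcSurface *surface_create(void) {
    RcSurface *s = calloc(1, sizeof *s);
    if (s)
        s->refs = 1;
    return s;
}

/* Adds one owner and returns the new count. */
unsigned surface_add_ref(RcSurface *s) {
    assert(s != NULL);
    return ++s->refs;
}

/* Drops one owner; frees the object when the last owner releases it.
 * Returns the number of remaining owners. */
unsigned surface_release(RcSurface *s) {
    assert(s != NULL && s->refs > 0);
    unsigned left = --s->refs;
    if (left == 0)
        free(s);
    return left;
}
```

Each owner calls the release function exactly once; the surface must not be touched after the call that returns zero.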

Decoding Procedures¶

Example 1 shows the pseudo code of the decoding procedure. The following describes a few key points:

The application can use the MFXVideoDECODE_DecodeHeader() function to retrieve decoding initialization parameters from the bitstream. This step is optional if such parameters are retrievable from other sources such as an audio/video splitter.

The application uses the MFXVideoDECODE_QueryIOSurf() function to obtain the number of working frame surfaces required to reorder output frames. This call is optional in general and required only when the application uses external allocation.

The application calls the MFXVideoDECODE_DecodeFrameAsync() function for a decoding operation, with the bitstream buffer (bits) and an unlocked working frame surface (work) as input parameters.

Attention

Starting from API version 2.0, the application can pass NULL as the working frame surface, in which case the SDK allocates the surface internally.

If decoding output is not available, the function returns a status code requesting additional bitstream input or working frame surfaces as follows:

MFX_ERR_MORE_DATA: The function needs additional bitstream input. The existing buffer contains less than a frame worth of bitstream data.

MFX_ERR_MORE_SURFACE: The function needs one more frame surface to produce any output.

MFX_ERR_REALLOC_SURFACE: Dynamic resolution change case - the function needs bigger working frame surface (work).

Upon successful decoding, the MFXVideoDECODE_DecodeFrameAsync() function returns MFX_ERR_NONE. However, the decoded frame data (identified by the disp pointer) is not yet available because the MFXVideoDECODE_DecodeFrameAsync() function is asynchronous. The application has to use the MFXVideoCORE_SyncOperation() function or the mfxFrameSurfaceInterface interface to synchronize the decoding operation before retrieving the decoded frame data.

At the end of the bitstream, the application continuously calls the MFXVideoDECODE_DecodeFrameAsync() function with a NULL bitstream pointer to drain any remaining frames cached within the SDK decoder, until the function returns MFX_ERR_MORE_DATA.
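The status handling described above can be summarized as a small decision function. The sketch below is only a model: the DecodeStatus and NextAction enumerations are hypothetical stand-ins and do not carry the numeric values of the real mfxStatus codes.

```c
#include <assert.h>

/* Hypothetical stand-ins for the status codes discussed above. */
typedef enum {
    STS_OK,               /* models MFX_ERR_NONE */
    STS_MORE_DATA,        /* models MFX_ERR_MORE_DATA */
    STS_MORE_SURFACE,     /* models MFX_ERR_MORE_SURFACE */
    STS_REALLOC_SURFACE,  /* models MFX_ERR_REALLOC_SURFACE */
    STS_OTHER             /* any other error */
} DecodeStatus;

typedef enum {
    ACT_SYNC_AND_OUTPUT,  /* synchronize, then use the decoded frame */
    ACT_FEED_BITSTREAM,   /* append more input and call again */
    ACT_FINISH,           /* draining is complete */
    ACT_NEW_SURFACE,      /* call again with another working surface */
    ACT_REALLOC_SURFACE,  /* allocate a bigger working surface */
    ACT_FAIL              /* error handling not covered here */
} NextAction;

/* Maps the result of one asynchronous decode call to the next step. */
NextAction next_decode_action(DecodeStatus sts, int end_of_stream) {
    assert(sts >= STS_OK && sts <= STS_OTHER);
    switch (sts) {
    case STS_OK:              return ACT_SYNC_AND_OUTPUT;
    case STS_MORE_DATA:       return end_of_stream ? ACT_FINISH : ACT_FEED_BITSTREAM;
    case STS_MORE_SURFACE:    return ACT_NEW_SURFACE;
    case STS_REALLOC_SURFACE: return ACT_REALLOC_SURFACE;
    default:                  return ACT_FAIL;
    }
}
```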

Example 2 below demonstrates the simplified decoding procedure.

Starting from API version 2.0, a new decoding approach is available. For simple use cases, in which the user just wants to decode an elementary stream and does not want to set additional parameters, a simplified decoder initialization procedure is provided. In such cases the application can skip the explicit stream header decoding and decoder initialization stages; the SDK performs them implicitly while decoding the first frame. This mode requires an additional field in the mfxBitstream object to indicate the codec type. In this mode the decoder allocates mfxFrameSurface1 internally, so the application should pass NULL as the input surface.

Example 1: Decoding Pseudo Code

    MFXVideoDECODE_DecodeHeader(session, bitstream, &init_param);
    MFXVideoDECODE_QueryIOSurf(session, &init_param, &request);
    allocate_pool_of_frame_surfaces(request.NumFrameSuggested);
    MFXVideoDECODE_Init(session, &init_param);
    sts=MFX_ERR_MORE_DATA;
    for (;;) {
        if (sts==MFX_ERR_MORE_DATA && !end_of_stream())
            append_more_bitstream(bitstream);
        find_unlocked_surface_from_the_pool(&work);
        bits=(end_of_stream())?NULL:bitstream;
        sts=MFXVideoDECODE_DecodeFrameAsync(session,bits,work,&disp,&syncp);
        if (sts==MFX_ERR_MORE_SURFACE) continue;
        if (end_of_stream() && sts==MFX_ERR_MORE_DATA) break;
        if (sts==MFX_ERR_REALLOC_SURFACE) {
            MFXVideoDECODE_GetVideoParam(session, &param);
            realloc_surface(work, param.mfx.FrameInfo);
            continue;
        }
        // skipped other error handling
        if (sts==MFX_ERR_NONE) {
            MFXVideoCORE_SyncOperation(session, syncp, INFINITE);
            do_something_with_decoded_frame(disp);
        }
    }
    MFXVideoDECODE_Close(session);
    free_pool_of_frame_surfaces();

Example 2: Simplified decoding procedure

    sts=MFX_ERR_MORE_DATA;
    for (;;) {
        if (sts==MFX_ERR_MORE_DATA && !end_of_stream())
            append_more_bitstream(bitstream);
        bits=(end_of_stream())?NULL:bitstream;
        // NULL working surface: the decoder allocates the surface internally
        sts=MFXVideoDECODE_DecodeFrameAsync(session,bits,NULL,&disp,&syncp);
        if (sts==MFX_ERR_MORE_SURFACE) continue;
        if (end_of_stream() && sts==MFX_ERR_MORE_DATA) break;
        // skipped other error handling
        if (sts==MFX_ERR_NONE) {
            MFXVideoCORE_SyncOperation(session, syncp, INFINITE);
            do_something_with_decoded_frame(disp);
            release_surface(disp);
        }
    }

Bitstream Repositioning¶

The application can use the following procedure for bitstream repositioning during decoding:

Use the MFXVideoDECODE_Reset() function to reset the SDK decoder.

Optionally, if the application maintains a sequence header that correctly decodes the bitstream at the new position, the application may insert the sequence header into the bitstream buffer.

Append the bitstream from the new location to the bitstream buffer.

Resume the decoding procedure. If the sequence header is not inserted in the above steps, the SDK decoder searches for a new sequence header before starting decoding.

Broken Streams Handling¶

Robustness and the ability to handle broken input streams are an important part of the decoder.

First, the start code prefix (ITU-T H.264 3.148 and ITU-T H.265 3.142) is used to separate NAL units. Then all syntax elements in the bitstream are parsed and verified. If any element violates the specification, the input bitstream is considered invalid and the decoder tries to re-sync (find the next start code). The subsequent decoder behavior depends on which syntax element is broken:

SPS header – return MFX_ERR_INCOMPATIBLE_VIDEO_PARAM (HEVC decoder only; the AVC decoder uses the last valid SPS)

PPS header – re-sync, use the last valid PPS for decoding

Slice header – skip this slice, re-sync

Slice data – corruption flags are set on the output surface
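The list above can be read as a lookup from the broken syntax element to a recovery action. The sketch below encodes exactly that table; all type and function names are hypothetical and it models the documented behavior only, not the decoder's implementation.

```c
#include <assert.h>

typedef enum { CODEC_AVC, CODEC_HEVC } Codec;

typedef enum {
    ELEM_SPS, ELEM_PPS, ELEM_SLICE_HEADER, ELEM_SLICE_DATA
} BrokenElement;

typedef enum {
    RECOVER_RETURN_INCOMPATIBLE_PARAM,  /* report MFX_ERR_INCOMPATIBLE_VIDEO_PARAM */
    RECOVER_USE_LAST_VALID,             /* re-sync and reuse the last valid header */
    RECOVER_SKIP_SLICE,                 /* drop the slice and re-sync */
    RECOVER_FLAG_CORRUPTION             /* mark the output surface as corrupted */
} Recovery;

/* Returns the documented reaction to a broken syntax element. */
Recovery recovery_for(Codec codec, BrokenElement elem) {
    assert(codec == CODEC_AVC || codec == CODEC_HEVC);
    switch (elem) {
    case ELEM_SPS:
        return codec == CODEC_HEVC ? RECOVER_RETURN_INCOMPATIBLE_PARAM
                                   : RECOVER_USE_LAST_VALID;
    case ELEM_PPS:
        return RECOVER_USE_LAST_VALID;
    case ELEM_SLICE_HEADER:
        return RECOVER_SKIP_SLICE;
    default:  /* ELEM_SLICE_DATA */
        return RECOVER_FLAG_CORRUPTION;
    }
}
```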

Note

Some requirements are relaxed because there are a lot of streams which violate the letter of the standard but can be decoded without errors.

Many streams have IDR frames with frame_num != 0 while the specification says that “If the current picture is an IDR picture, frame_num shall be equal to 0.” (ITU-T H.264 7.4.3)

VUI is also validated, but errors in it do not invalidate the whole SPS: the decoder either does not use the corrupted VUI (AVC) or resets incorrect values to defaults (HEVC).

Corruption in a reference frame spreads to all inter-coded pictures that use this reference for prediction. To cope with this problem, either insert I-frames (intra-coded) periodically or use the ‘intra refresh’ technique. The latter allows recovery from corruption within a pre-defined time interval. The main point of ‘intra refresh’ is to insert a cyclic intra-coded pattern (usually a row) of macroblocks into the inter-coded pictures, restricting motion vectors accordingly. Intra refresh is often used in combination with the Recovery point SEI message, where recovery_frame_cnt is derived from the intra-refresh interval. The Recovery point SEI message is described in ITU-T H.264 D.2.7 and ITU-T H.265 D.2.8. The decoder can use this message to determine from which picture onward all subsequent pictures (in display order) contain no errors when decoding starts from the AU associated with this SEI message. In contrast to IDR, the recovery point message doesn’t mark reference pictures as “unused for reference”.

Besides validating syntax elements and their constraints, the decoder also uses various hints to handle broken streams.

If there are no valid slices for current frame – the whole frame is skipped.

The slices which violate slice segment header semantics (ITU-T H.265 7.4.7.1) are skipped. Only slice_temporal_mvp_enabled_flag is checked for now.

Since an LTR (Long Term Reference) stays in the DPB until it is explicitly cleared by IDR or MMCO, an incorrect LTR could cause long-standing visual artifacts. The AVC decoder uses the following approaches to handle this:

When a DPB overflow is caused by an incorrect MMCO command that marks a reference picture as LT, the operation is rolled back

An IDR frame with frame_num != 0 can’t be LTR

If decoder detects frame gapping, it inserts ‘fake’ (marked as non-existing) frames, updates FrameNumWrap (ITU-T H.264 8.2.4.1) for reference frames and applies Sliding Window (ITU-T H.264 8.2.5.3) marking process. ‘Fake’ frames are marked as reference, but since they are marked as non-existing they are not really used for inter-prediction.

VP8 Specific Details¶

Unlike the other decoders supported by the SDK, VP8 accepts only a complete frame as input, and the application should provide it together with the MFX_BITSTREAM_COMPLETE_FRAME flag. This is the only VP8-specific difference.

JPEG¶

The application can use the same decoding procedures for JPEG/motion JPEG decoding, as illustrated in pseudo code below:

    // optional; retrieve initialization parameters
    MFXVideoDECODE_DecodeHeader(...);
    // decoder initialization
    MFXVideoDECODE_Init(...);
    // single frame/picture decoding
    MFXVideoDECODE_DecodeFrameAsync(...);
    MFXVideoCORE_SyncOperation(...);
    // optional; retrieve meta-data
    MFXVideoDECODE_GetUserData(...);
    // close
    MFXVideoDECODE_Close(...);

DECODE supports JPEG baseline profile decoding as follows:

DCT-based process

Source image: 8-bit samples within each component

Sequential

Huffman coding: 2 AC and 2 DC tables

3 loadable quantization matrixes

Interleaved and non-interleaved scans

Single and multiple scans

chroma subsampling ratios:

Chroma 4:0:0 (grey image)

Chroma 4:1:1

Chroma 4:2:0

Chroma horizontal 4:2:2

Chroma vertical 4:2:2

Chroma 4:4:4

3-channel images

The MFXVideoDECODE_Query() function will return MFX_ERR_UNSUPPORTED if the input bitstream contains unsupported features.

For still picture JPEG decoding, the input can be any JPEG bitstreams that conform to the ITU-T* Recommendation T.81, with an EXIF* or JFIF* header. For motion JPEG decoding, the input can be any JPEG bitstreams that conform to the ITU-T Recommendation T.81.

Unlike other SDK decoders, the JPEG decoder supports three different output color formats: NV12, YUY2 and RGB32. This support sometimes requires internal color conversion and more complicated initialization. The color format of the input bitstream is described by the JPEGChromaFormat and JPEGColorFormat fields in the mfxInfoMFX structure. The MFXVideoDECODE_DecodeHeader() function usually fills them in, but if the JPEG bitstream does not contain color format information, the application should provide it. The output color format is described by general SDK parameters: the FourCC and ChromaFormat fields in the mfxFrameInfo structure.

Motion JPEG supports interlaced content by compressing each field (a half-height frame) individually. This behavior is incompatible with the rest of the SDK transcoding pipeline, where the SDK requires that fields be in odd and even lines of the same frame surface. The decoding procedure is modified as follows:

The application calls the MFXVideoDECODE_DecodeHeader() function, with the first field JPEG bitstream, to retrieve initialization parameters.

The application initializes the SDK JPEG decoder with the following settings:

Set the PicStruct field of the mfxVideoParam structure with the proper interlaced type, MFX_PICSTRUCT_FIELD_TFF or MFX_PICSTRUCT_FIELD_BFF, from the motion JPEG header.

Double the Height field of the mfxVideoParam structure, as the value returned by the MFXVideoDECODE_DecodeHeader() function describes only the first field. The actual frame surface should contain both fields.

During decoding, the application sends both fields for decoding together in the same mfxBitstream. The application should also set DataFlag in the mfxBitstream structure to MFX_BITSTREAM_COMPLETE_FRAME. The SDK decodes both fields and combines them into odd and even lines as in the SDK convention.
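To make the target layout concrete, the sketch below weaves two half-height fields into one frame buffer, with the top field on rows 0, 2, 4, ... (the "odd lines" when counting from one) and the bottom field on the rows in between. It is an illustration of the layout only: weave_fields() is a hypothetical helper for a single tightly packed 8-bit plane, not the SDK's internal routine.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Interleaves two fields of field_height rows into a frame of
 * 2 * field_height rows. All buffers are tightly packed (pitch == width). */
void weave_fields(const unsigned char *top, const unsigned char *bottom,
                  size_t width, size_t field_height, unsigned char *frame) {
    assert(top != NULL && bottom != NULL && frame != NULL);
    for (size_t y = 0; y < field_height; ++y) {
        /* top field row y goes to frame row 2y */
        memcpy(frame + (2 * y) * width, top + y * width, width);
        /* bottom field row y goes to frame row 2y + 1 */
        memcpy(frame + (2 * y + 1) * width, bottom + y * width, width);
    }
}
```

This also shows why the Height reported for the first field has to be doubled: the output buffer holds 2 * field_height rows.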

The SDK supports JPEG picture rotation, in multiples of 90 degrees, as part of the decoding operation. By default, the MFXVideoDECODE_DecodeHeader() function returns the Rotation parameter so that after rotation, the pixel at the first row and first column is at the top left. The application can overwrite the default rotation before calling MFXVideoDECODE_Init().

The application may specify Huffman and quantization tables during decoder initialization by attaching mfxExtJPEGQuantTables and mfxExtJPEGHuffmanTables buffers to the mfxVideoParam structure. In this case, the decoder ignores the tables from the bitstream and uses the tables specified by the application. The application can also retrieve these tables by attaching the same buffers to mfxVideoParam and calling the MFXVideoDECODE_GetVideoParam() or MFXVideoDECODE_DecodeHeader() functions.

Multi-view video decoding¶

The SDK MVC decoder operates on complete MVC streams that contain all view/temporal configurations. The application can configure the SDK decoder to generate a subset at the decoding output. To do this, the application needs to understand the stream structure and based on such information configure the SDK decoder for target views.

The decoder initialization procedure is as follows:

The application calls the MFXVideoDECODE_DecodeHeader function to obtain the stream structural information. This is actually done in two sub-steps:

- The application calls the MFXVideoDECODE_DecodeHeader function with the mfxExtMVCSeqDesc structure attached to the mfxVideoParam structure. Do not allocate memory for the arrays in the mfxExtMVCSeqDesc structure just yet. Set the View, ViewId and OP pointers to NULL and set NumViewAlloc, NumViewIdAlloc and NumOPAlloc to zero. The function parses the bitstream and returns MFX_ERR_NOT_ENOUGH_BUFFER with the correct values of NumView, NumViewId and NumOP. This step can be skipped if the application is able to obtain the NumView, NumViewId and NumOP values from other sources.

- The application allocates memory for the View, ViewId and OP arrays and calls the MFXVideoDECODE_DecodeHeader function again. The function returns the MVC structural information in the allocated arrays.

The application fills the mfxExtMvcTargetViews structure to choose the target views, based on information described in the mfxExtMVCSeqDesc structure.

The application initializes the SDK decoder using the MFXVideoDECODE_Init function. The application must attach both the mfxExtMVCSeqDesc structure and the mfxExtMvcTargetViews structure to the mfxVideoParam structure.
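The two-call header query follows a common "ask for the size, allocate, ask again" pattern. The sketch below models it with a mock parser; SeqDesc, mock_decode_header() and the HDR_* codes are hypothetical stand-ins for mfxExtMVCSeqDesc, MFXVideoDECODE_DecodeHeader and the SDK status codes, and only the View array is modeled.

```c
#include <assert.h>
#include <stdlib.h>

enum { HDR_OK = 0, HDR_NOT_ENOUGH_BUFFER = -1, HDR_ALLOC_FAILED = -2 };

typedef struct {
    unsigned NumView;       /* entries the stream needs (output) */
    unsigned NumViewAlloc;  /* entries the caller allocated (input) */
    unsigned *View;         /* caller-owned array, NULL on the first call */
} SeqDesc;

/* Mock parser: pretends the stream contains three views with ids 0, 1, 2. */
int mock_decode_header(SeqDesc *d) {
    const unsigned needed = 3;
    d->NumView = needed;
    if (d->View == NULL || d->NumViewAlloc < needed)
        return HDR_NOT_ENOUGH_BUFFER;  /* sizes reported, arrays untouched */
    for (unsigned i = 0; i < needed; ++i)
        d->View[i] = i;
    return HDR_OK;
}

/* First call learns the sizes, second call fills the allocated array. */
int read_stream_structure(SeqDesc *d) {
    assert(d != NULL);
    d->View = NULL;
    d->NumViewAlloc = 0;
    int sts = mock_decode_header(d);
    if (sts != HDR_NOT_ENOUGH_BUFFER)
        return sts;
    d->View = malloc(d->NumView * sizeof *d->View);
    if (d->View == NULL)
        return HDR_ALLOC_FAILED;
    d->NumViewAlloc = d->NumView;
    return mock_decode_header(d);
}
```

The caller owns the array after the second call and frees it once the decoder no longer needs the structure.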

In the above steps, do not modify the values of the mfxExtMVCSeqDesc structure after the MFXVideoDECODE_DecodeHeader function, as the SDK decoder uses the values in the structure for internal memory allocation. Once the application configures the SDK decoder, the rest of the decoding procedure remains unchanged. As illustrated in the pseudo code below, the application calls the MFXVideoDECODE_DecodeFrameAsync function multiple times to obtain all target views of the current frame picture, one target view at a time. The target view is identified by the FrameId field of the mfxFrameInfo structure.

    mfxExtBuffer *eb[2];
    mfxExtMVCSeqDesc seq_desc;
    mfxVideoParam init_param;

    init_param.ExtParam=eb;
    init_param.NumExtParam=1;
    eb[0]=(mfxExtBuffer *)&seq_desc;
    MFXVideoDECODE_DecodeHeader(session, bitstream, &init_param);

    /* select views to decode */
    mfxExtMvcTargetViews tv;
    init_param.NumExtParam=2;
    eb[1]=(mfxExtBuffer *)&tv;

    /* initialize decoder */
    MFXVideoDECODE_Init(session, &init_param);

    /* perform decoding */
    for (;;) {
        MFXVideoDECODE_DecodeFrameAsync(session, bits, work, &disp, &syncp);
        MFXVideoCORE_SyncOperation(session, syncp, INFINITE);
    }

    /* close decoder */
    MFXVideoDECODE_Close(session);

Encoding Procedures¶

Encoding procedure¶

There are two ways of allocating and handling shared memory in the SDK: external and internal.

The example below shows the pseudo code of the encoding procedure with external memory (legacy mode).

    MFXVideoENCODE_QueryIOSurf(session, &init_param, &request);
    allocate_pool_of_frame_surfaces(request.NumFrameSuggested);
    MFXVideoENCODE_Init(session, &init_param);
    sts=MFX_ERR_MORE_DATA;
    for (;;) {
        if (sts==MFX_ERR_MORE_DATA && !end_of_stream()) {
            find_unlocked_surface_from_the_pool(&surface);
            fill_content_for_encoding(surface);
        }
        surface2=end_of_stream()?NULL:surface;
        sts=MFXVideoENCODE_EncodeFrameAsync(session,NULL,surface2,bits,&syncp);
        if (end_of_stream() && sts==MFX_ERR_MORE_DATA) break;
        // Skipped other error handling
        if (sts==MFX_ERR_NONE) {
            MFXVideoCORE_SyncOperation(session, syncp, INFINITE);
            do_something_with_encoded_bits(bits);
        }
    }
    MFXVideoENCODE_Close(session);
    free_pool_of_frame_surfaces();

The following describes a few key points:

The application uses the MFXVideoENCODE_QueryIOSurf function to obtain the number of working frame surfaces required for reordering input frames.

The application calls the MFXVideoENCODE_EncodeFrameAsync function for the encoding operation. The input frame must be in an unlocked frame surface from the frame surface pool. If the encoding output is not available, the function returns the status code MFX_ERR_MORE_DATA to request additional input frames.

Upon successful encoding, the MFXVideoENCODE_EncodeFrameAsync function returns MFX_ERR_NONE. However, the encoded bitstream is not yet available because the MFXVideoENCODE_EncodeFrameAsync function is asynchronous. The application must use the MFXVideoCORE_SyncOperation function to synchronize the encoding operation before retrieving the encoded bitstream.

At the end of the stream, the application continuously calls the MFXVideoENCODE_EncodeFrameAsync function with NULL surface pointer to drain any remaining bitstreams cached within the SDK encoder, until the function returns MFX_ERR_MORE_DATA.

Note

It is the application’s responsibility to fill pixels outside of the crop window when it is smaller than the frame to be encoded, especially when the crops are not aligned to the minimum coding block size (16 for AVC, 8 for HEVC and VP9).
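One way to fill the area outside the crop window is to replicate the last valid column and row. This is an assumption made for illustration: the text above only requires that the pixels be filled, not how. The helpers align_up() and pad_plane() are hypothetical and operate on a single 8-bit plane.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Rounds v up to the next multiple of a (for example 16 for AVC). */
size_t align_up(size_t v, size_t a) {
    assert(a > 0);
    return (v + a - 1) / a * a;
}

/* Fills the region between the crop window (crop_w x crop_h) and the full
 * frame (full_w x full_h) by repeating the nearest valid edge pixel. */
void pad_plane(unsigned char *plane, size_t pitch,
               size_t crop_w, size_t crop_h, size_t full_w, size_t full_h) {
    assert(plane != NULL && crop_w > 0 && crop_h > 0);
    assert(crop_w <= full_w && crop_h <= full_h && full_w <= pitch);
    /* extend every valid row to the right */
    for (size_t y = 0; y < crop_h; ++y) {
        unsigned char *row = plane + y * pitch;
        memset(row + crop_w, row[crop_w - 1], full_w - crop_w);
    }
    /* repeat the last valid (already extended) row downwards */
    for (size_t y = crop_h; y < full_h; ++y)
        memcpy(plane + y * pitch, plane + (crop_h - 1) * pitch, full_w);
}
```

For a 1920x1080 AVC frame, align_up(1080, 16) gives a coded height of 1088, so eight rows below the crop window need to be filled.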

Another approach is for the SDK to allocate memory for shared objects internally.

    MFXVideoENCODE_Init(session, &init_param);
    sts=MFX_ERR_MORE_DATA;
    for (;;) {
        if (sts==MFX_ERR_MORE_DATA && !end_of_stream()) {
            MFXMemory_GetSurfaceForEncode(session, &surface);
            fill_content_for_encoding(surface);
        }
        surface2=end_of_stream()?NULL:surface;
        sts=MFXVideoENCODE_EncodeFrameAsync(session,NULL,surface2,bits,&syncp);
        // drop the application's reference to the submitted surface
        if (surface2) surface2->FrameInterface->Release(surface2);
        if (end_of_stream() && sts==MFX_ERR_MORE_DATA) break;
        // Skipped other error handling
        if (sts==MFX_ERR_NONE) {
            MFXVideoCORE_SyncOperation(session, syncp, INFINITE);
            do_something_with_encoded_bits(bits);
        }
    }
    MFXVideoENCODE_Close(session);

There are several key points which are different from legacy mode:

The application doesn’t need to call the MFXVideoENCODE_QueryIOSurf function to obtain the number of working frame surfaces, since allocation is done by the SDK.

The application calls the MFXMemory_GetSurfaceForEncode function to get a free surface for the following encode operation.

The application needs to call the FrameInterface->(*Release) function to decrement the reference counter of the obtained surface after the MFXVideoENCODE_EncodeFrameAsync call.

Configuration Change¶

The application changes the configuration during encoding by calling the MFXVideoENCODE_Reset function. Depending on the difference between the configuration parameters before and after the change, the SDK encoder either continues the current sequence or starts a new one. If the SDK encoder starts a new sequence, it completely resets its internal state and begins the new sequence with an IDR frame.

The application controls encoder behavior during a parameter change by attaching mfxExtEncoderResetOption to the mfxVideoParam structure during reset. By using this structure, the application instructs the encoder to start or not to start a new sequence after reset. In some cases a request to continue the current sequence cannot be satisfied and the encoder fails during reset. To avoid such cases, the application may query the reset outcome before the actual reset by calling the MFXVideoENCODE_Query function with mfxExtEncoderResetOption attached to the mfxVideoParam structure.

The application uses the following procedure to change encoding configurations:

The application retrieves any cached frames in the SDK encoder by calling the MFXVideoENCODE_EncodeFrameAsync function with a NULL input frame pointer until the function returns MFX_ERR_MORE_DATA.

Note

The application must set the initial encoding configuration flag EndOfStream of the mfxExtCodingOption structure to OFF to avoid inserting an End of Stream (EOS) marker into the bitstream. An EOS marker causes the bitstream to terminate before encoding is complete.

The application calls the MFXVideoENCODE_Reset function with the new configuration:

If the function successfully sets the configuration, the application can continue encoding as usual.

If the new configuration requires a new memory allocation, the function returns MFX_ERR_INCOMPATIBLE_VIDEO_PARAM. The application must close the SDK encoder and reinitialize the encoding procedure with the new configuration.

External Bit Rate Control¶

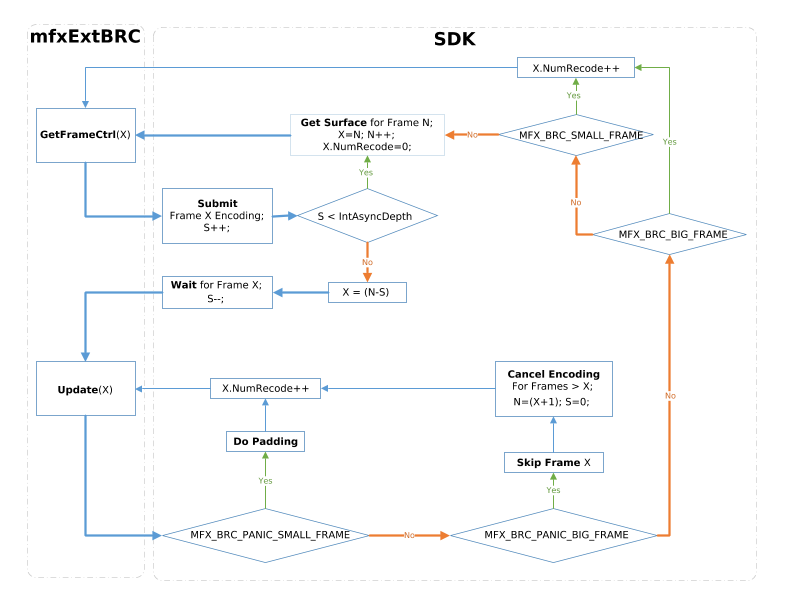

The application can make encoder use external BRC instead of native one. In order to do that it should attach to mfxVideoParam structure mfxExtCodingOption2 with ExtBRC = MFX_CODINGOPTION_ON and callback structure mfxExtBRC during encoder initialization. Callbacks Init, Reset and Close will be invoked inside MFXVideoENCODE_Init, MFXVideoENCODE_Reset and MFXVideoENCODE_Close correspondingly. Figure below illustrates asynchronous encoding flow with external BRC (usage of GetFrameCtrl and Update):

Note

IntAsyncDepth is the SDK max internal asynchronous encoding queue size; it is always less than or equal to mfxVideoParam::AsyncDepth.

External BRC Pseudo Code:

    #include <stdbool.h>
    #include <stdlib.h>
    #include <string.h>
    #include "mfxvideo.h"
    #include "mfxbrc.h"

    typedef struct {
        mfxU32 EncodedOrder;
        mfxI32 QP;
        mfxU32 MaxSize;
        mfxU32 MinSize;
        mfxU16 Status;
        mfxU64 StartTime;
        // ... skipped
    } MyBrcFrame;

    typedef struct {
        MyBrcFrame* frame_queue;
        mfxU32 frame_queue_size;
        mfxU32 frame_queue_max_size;
        mfxI32 max_qp[3]; //I,P,B
        mfxI32 min_qp[3]; //I,P,B
        // ... skipped
    } MyBrcContext;

    mfxStatus MyBrcInit(mfxHDL pthis, mfxVideoParam* par) {
        MyBrcContext* ctx = (MyBrcContext*)pthis;
        mfxI32 QpBdOffset;
        mfxExtCodingOption2* co2;

        if (!pthis || !par)
            return MFX_ERR_NULL_PTR;

        if (!IsParametersSupported(par))
            return MFX_ERR_UNSUPPORTED;

        // one queue slot per frame that can be in flight
        ctx->frame_queue_max_size = par->AsyncDepth;
        ctx->frame_queue = (MyBrcFrame*)malloc(sizeof(MyBrcFrame) * ctx->frame_queue_max_size);

        if (!ctx->frame_queue)
            return MFX_ERR_MEMORY_ALLOC;

        co2 = (mfxExtCodingOption2*)GetExtBuffer(par->ExtParam, par->NumExtParam, MFX_EXTBUFF_CODING_OPTION2);
        // QP range grows by 6 for every bit of depth above 8
        QpBdOffset = (par->mfx.FrameInfo.BitDepthLuma > 8) ? (6 * (par->mfx.FrameInfo.BitDepthLuma - 8)) : 0;

        for (<X = I,P,B>) {
            ctx->max_qp[X] = (co2 && co2->MaxQPX) ? (co2->MaxQPX - QpBdOffset) : <Default>;
            ctx->min_qp[X] = (co2 && co2->MinQPX) ? (co2->MinQPX - QpBdOffset) : <Default>;
        }

        // skipped initialization of other BRC parameters

        ctx->frame_queue_size = 0;

        return MFX_ERR_NONE;
    }

    mfxStatus MyBrcReset(mfxHDL pthis, mfxVideoParam* par) {
        MyBrcContext* ctx = (MyBrcContext*)pthis;

        if (!pthis || !par)
            return MFX_ERR_NULL_PTR;

        if (!IsParametersSupported(par))
            return MFX_ERR_UNSUPPORTED;

        if (!IsResetPossible(ctx, par))
            return MFX_ERR_INCOMPATIBLE_VIDEO_PARAM;

        // reset here BRC parameters if required

        return MFX_ERR_NONE;
    }

    mfxStatus MyBrcClose(mfxHDL pthis) {
        MyBrcContext* ctx = (MyBrcContext*)pthis;

        if (!pthis)
            return MFX_ERR_NULL_PTR;

        if (ctx->frame_queue) {
            free(ctx->frame_queue);
            ctx->frame_queue = NULL;
            ctx->frame_queue_max_size = 0;
            ctx->frame_queue_size = 0;
        }

        return MFX_ERR_NONE;
    }

    mfxStatus MyBrcGetFrameCtrl(mfxHDL pthis, mfxBRCFrameParam* par, mfxBRCFrameCtrl* ctrl) {
        MyBrcContext* ctx = (MyBrcContext*)pthis;
        MyBrcFrame* frame = NULL;
        mfxU32 cost;

        if (!pthis || !par || !ctrl)
            return MFX_ERR_NULL_PTR;

        if (par->NumRecode > 0)
            // re-encode: the frame is already in the queue
            frame = GetFrame(ctx->frame_queue, ctx->frame_queue_size, par->EncodedOrder);
        else if (ctx->frame_queue_size < ctx->frame_queue_max_size)
            // first pass: take the next free queue slot
            frame = &ctx->frame_queue[ctx->frame_queue_size++];

        if (!frame)
            return MFX_ERR_UNDEFINED_BEHAVIOR;

        if (par->NumRecode == 0) {
            frame->EncodedOrder = par->EncodedOrder;
            cost = GetFrameCost(par->FrameType, par->PyramidLayer);
            frame->MinSize = GetMinSize(ctx, cost);
            frame->MaxSize = GetMaxSize(ctx, cost);
            frame->QP = GetInitQP(ctx, frame->MinSize, frame->MaxSize, cost); // from QP/size stat
            frame->StartTime = GetTime();
        }

        ctrl->QpY = frame->QP;

        return MFX_ERR_NONE;
    }

    mfxStatus MyBrcUpdate(mfxHDL pthis, mfxBRCFrameParam* par, mfxBRCFrameCtrl* ctrl, mfxBRCFrameStatus* status) {
        MyBrcContext* ctx = (MyBrcContext*)pthis;
        MyBrcFrame* frame = NULL;
        bool panic = false;

        if (!pthis || !par || !ctrl || !status)
            return MFX_ERR_NULL_PTR;

        frame = GetFrame(ctx->frame_queue, ctx->frame_queue_size, par->EncodedOrder);
        if (!frame)
            return MFX_ERR_UNDEFINED_BEHAVIOR;

        // update QP/size stat here

        // a panic status set by the previous Update call for this frame
        if (   frame->Status == MFX_BRC_PANIC_BIG_FRAME
            || frame->Status == MFX_BRC_PANIC_SMALL_FRAME)
            panic = true;

        if (panic || (par->CodedFrameSize >= frame->MinSize && par->CodedFrameSize <= frame->MaxSize)) {
            UpdateBRCState(par->CodedFrameSize, ctx);
            RemoveFromQueue(ctx->frame_queue, ctx->frame_queue_size, frame);
            ctx->frame_queue_size--;
            status->BRCStatus = MFX_BRC_OK;

            // Here update Min/MaxSize for all queued frames

            return MFX_ERR_NONE;
        }

        panic = ((GetTime() - frame->StartTime) >= GetMaxFrameEncodingTime(ctx));

        // X below is the index for the frame type (I, P or B)
        if (par->CodedFrameSize > frame->MaxSize) {
            if (panic || (frame->QP >= ctx->max_qp[X])) {
                frame->Status = MFX_BRC_PANIC_BIG_FRAME;
            } else {
                frame->Status = MFX_BRC_BIG_FRAME;
                frame->QP = <increase QP>;
            }
        }

        if (par->CodedFrameSize < frame->MinSize) {
            if (panic || (frame->QP <= ctx->min_qp[X])) {
                frame->Status = MFX_BRC_PANIC_SMALL_FRAME;
                status->MinFrameSize = frame->MinSize;
            } else {
                frame->Status = MFX_BRC_SMALL_FRAME;
                frame->QP = <decrease QP>;
            }
        }

        status->BRCStatus = frame->Status;

        return MFX_ERR_NONE;
    }

    //initialize encoder
    MyBrcContext brc_ctx;
    mfxExtBRC ext_brc;
    mfxExtCodingOption2 co2;
    mfxExtBuffer* ext_buf[2] = {&co2.Header, &ext_brc.Header};

    memset(&brc_ctx, 0, sizeof(MyBrcContext));
    memset(&ext_brc, 0, sizeof(mfxExtBRC));
    memset(&co2, 0, sizeof(mfxExtCodingOption2));

    vpar.ExtParam = ext_buf;
    vpar.NumExtParam = sizeof(ext_buf) / sizeof(ext_buf[0]);

    co2.Header.BufferId = MFX_EXTBUFF_CODING_OPTION2;
    co2.Header.BufferSz = sizeof(mfxExtCodingOption2);
    co2.ExtBRC = MFX_CODINGOPTION_ON;

    ext_brc.Header.BufferId = MFX_EXTBUFF_BRC;
    ext_brc.Header.BufferSz = sizeof(mfxExtBRC);
    ext_brc.pthis = &brc_ctx;
    ext_brc.Init = MyBrcInit;
    ext_brc.Reset = MyBrcReset;
    ext_brc.Close = MyBrcClose;
    ext_brc.GetFrameCtrl = MyBrcGetFrameCtrl;
    ext_brc.Update = MyBrcUpdate;

    status = MFXVideoENCODE_Query(session, &vpar, &vpar);
    if (status == MFX_ERR_UNSUPPORTED || co2.ExtBRC != MFX_CODINGOPTION_ON) {
        // unsupported case
    } else {
        status = MFXVideoENCODE_Init(session, &vpar);
    }
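The QP range handling in MyBrcInit can be isolated into small helpers. The sketch below mirrors the rule used in the pseudo code (the offset is 6 per bit of luma depth above 8, and a user limit of zero means "not set"); the function names are hypothetical and the default value is whatever the BRC implementation chooses.

```c
#include <assert.h>

/* Offset applied to QP limits for bit depths above 8: 6 per extra bit. */
int qp_bd_offset(int bit_depth_luma) {
    assert(bit_depth_luma >= 8);
    return (bit_depth_luma > 8) ? 6 * (bit_depth_luma - 8) : 0;
}

/* Converts a user-supplied QP limit into the BRC's internal range.
 * A limit of 0 is treated as "not set" and replaced by default_qp. */
int effective_qp_limit(int user_qp, int bit_depth_luma, int default_qp) {
    return user_qp ? user_qp - qp_bd_offset(bit_depth_luma) : default_qp;
}
```

For 10-bit content the offset is 12, so a user limit of 63 maps to an internal limit of 51.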

JPEG¶

The application can use the same encoding procedures for JPEG/motion JPEG encoding, as illustrated by the pseudo code:

    // encoder initialization
    MFXVideoENCODE_Init(...);
    // single frame/picture encoding
    MFXVideoENCODE_EncodeFrameAsync(...);
    MFXVideoCORE_SyncOperation(...);
    // close down
    MFXVideoENCODE_Close(...);

ENCODE supports JPEG baseline profile encoding as follows:

DCT-based process

Source image: 8-bit samples within each component

Sequential

Huffman coding: 2 AC and 2 DC tables

3 loadable quantization matrixes

Interleaved and non-interleaved scans

Single and multiple scans

chroma subsampling ratios:

Chroma 4:0:0 (grey image)

Chroma 4:1:1

Chroma 4:2:0

Chroma horizontal 4:2:2

Chroma vertical 4:2:2

Chroma 4:4:4

3-channel images

The application may specify Huffman and quantization tables during encoder initialization by attaching mfxExtJPEGQuantTables and mfxExtJPEGHuffmanTables buffers to the mfxVideoParam structure. If the application does not define tables, the SDK encoder uses the tables recommended in ITU-T* Recommendation T.81. If the application does not define a quantization table, it has to specify the Quality parameter in the mfxInfoMFX structure. In this case, the SDK encoder scales the default quantization table according to the specified Quality parameter.

The application should properly configure the chroma sampling format and color format. The FourCC and ChromaFormat fields in the mfxFrameInfo structure are used for this. For example, to encode a 4:2:2 vertically sampled YCbCr picture, the application should set FourCC to MFX_FOURCC_YUY2 and ChromaFormat to MFX_CHROMAFORMAT_YUV422V. To encode a 4:4:4 sampled RGB picture, the application should set FourCC to MFX_FOURCC_RGB4 and ChromaFormat to MFX_CHROMAFORMAT_YUV444.

The SDK encoder supports different sets of chroma sampling and color formats on different platforms. The application has to call the MFXVideoENCODE_Query() function to check whether the required color format is supported on the given platform and then initialize the encoder with proper values of FourCC and ChromaFormat in the mfxFrameInfo structure.

The application should not define the number of scans and the number of components. They are derived by the SDK encoder from the Interleaved flag in the mfxInfoMFX structure and from the chroma type. If interleaved coding is specified, one scan is encoded that contains all image components. Otherwise, the number of scans is equal to the number of components. The SDK encoder uses the following component IDs: “1” for luma (Y), “2” for chroma Cb (U) and “3” for chroma Cr (V).

The application should allocate a buffer big enough to hold the encoded picture. Roughly, its upper limit may be calculated using the following equation:

BufferSizeInKB = 4 + (Width * Height * BytesPerPx + 1023) / 1024;

where Width and Height are the width and height of the picture in pixels, and BytesPerPx is the number of bytes per pixel. It equals 1 for a monochrome picture, 1.5 for the NV12 and YV12 color formats, 2 for the YUY2 color format, and 3 for the RGB32 color format (the alpha channel is not encoded).
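The estimate can be computed with integer arithmetic by expressing BytesPerPx as a fraction. The sketch below is one possible implementation; PixelFormat and jpeg_buffer_size_kb() are hypothetical names, and the division by 1024 truncates, as in the equation.

```c
#include <assert.h>
#include <stdint.h>

typedef enum { FMT_MONO, FMT_NV12, FMT_YV12, FMT_YUY2, FMT_RGB32 } PixelFormat;

/* Rough upper limit, in KB, of the buffer for one encoded JPEG picture. */
uint64_t jpeg_buffer_size_kb(uint64_t width, uint64_t height, PixelFormat fmt) {
    uint64_t halves;  /* bytes per pixel, expressed in units of 1/2 byte */
    switch (fmt) {
    case FMT_MONO:  halves = 2; break;  /* 1 byte per pixel */
    case FMT_NV12:
    case FMT_YV12:  halves = 3; break;  /* 1.5 bytes per pixel */
    case FMT_YUY2:  halves = 4; break;  /* 2 bytes per pixel */
    default:        halves = 6; break;  /* RGB32: 3 bytes, alpha not encoded */
    }
    assert(width > 0 && height > 0);
    /* round the half-byte total up to whole bytes */
    uint64_t bytes = (width * height * halves + 1) / 2;
    return 4 + (bytes + 1023) / 1024;
}
```

For a 1920x1080 NV12 picture this gives 3042 KB, and for a 640x480 monochrome picture 304 KB.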

Multi-view video encoding¶

Similar to the decoding and video processing initialization procedures, the application attaches the mfxExtMVCSeqDesc structure to the mfxVideoParam structure for encoding initialization. The mfxExtMVCSeqDesc structure configures the SDK MVC encoder to work in three modes:

Default dependency mode: the application specifies NumView and sets all other fields to zero. The SDK encoder creates a single operation point with all views (view identifier 0 : NumView-1) as target views. The first view (view identifier 0) is the base view. Other views depend on the base view.

Explicit dependency mode: the application specifies NumView and the View dependency array, and sets all other fields to zero. The SDK encoder creates a single operation point with all views (view identifier View[0 : NumView-1].ViewId) as target views. The first view (view identifier View[0].ViewId) is the base view. The view dependencies follow the View dependency structures.

Complete mode: the application fully specifies the views and their dependencies. The SDK encoder generates a bitstream with corresponding stream structures.

The SDK MVC encoder does not support importing sequence and picture headers via the mfxExtCodingOptionSPSPPS structure, or configuring reference frame list via the mfxExtRefListCtrl structure.

During encoding, the SDK encoding function MFXVideoENCODE_EncodeFrameAsync accumulates input frames until encoding of a picture is possible. The function returns MFX_ERR_MORE_DATA when it needs more input data, or MFX_ERR_NONE once it has accumulated enough data to encode a picture. By default, the generated bitstream contains the complete picture (multiple views). The application can change this behavior and instruct the encoder to output each view in a separate bitstream buffer by turning on the ViewOutput flag in the mfxExtCodingOption structure. In this case, the encoder returns MFX_ERR_MORE_BITSTREAM if it needs more bitstream buffers at output, and MFX_ERR_NONE when processing of the picture (multiple views) has finished. It is recommended that the application provide a new input frame each time the SDK encoder requests a new bitstream buffer. The application must submit view data for encoding in the order in which the views are described in the mfxExtMVCSeqDesc structure. Data for a particular view can be submitted for encoding only after all views that it depends on have been submitted.

The following pseudo code shows the encoding procedure.

mfxExtBuffer *eb;

mfxExtMVCSeqDesc seq_desc;

mfxVideoParam init_param;

init_param.ExtParam=&eb;

init_param.NumExtParam=1;

eb=(mfxExtBuffer *)&seq_desc;

/* init encoder */

MFXVideoENCODE_Init(session, &init_param);

/* perform encoding */

for (;;) {

MFXVideoENCODE_EncodeFrameAsync(session, NULL, surface2, bits, &syncp);

MFXVideoCORE_SyncOperation(session,syncp,INFINITE);

}

/* close encoder */

MFXVideoENCODE_Close(session);
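The submission-order rule described above (a view may be submitted only after every view it depends on) can be checked before encoding starts. The sketch below uses a hypothetical, simplified view_desc type rather than the real mfxExtMVCSeqDesc layout, so the type, its fields, and the function name are all assumptions for illustration.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_DEPS 4

/* Hypothetical, simplified view description (not an SDK structure):
 * a view identifier plus the identifiers of the views it depends on. */
typedef struct {
    int view_id;
    int num_deps;
    int deps[MAX_DEPS];
} view_desc;

/* Returns 1 if every view is listed after all views it depends on,
 * and 0 otherwise. The array order is the intended submission order. */
int submission_order_is_valid(const view_desc *views, size_t count)
{
    size_t i, j;
    int d;

    assert(views != NULL || count == 0);
    for (i = 0; i < count; i++) {
        for (d = 0; d < views[i].num_deps; d++) {
            int found = 0;
            /* Look for the dependency among the views submitted earlier. */
            for (j = 0; j < i; j++) {
                if (views[j].view_id == views[i].deps[d]) {
                    found = 1;
                    break;
                }
            }
            if (!found)
                return 0;
        }
    }
    return 1;
}
```

A base view followed by views that depend only on earlier entries passes the check; listing a dependent view before its base view fails it.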

Video Processing Procedures¶

The example below shows the pseudo code of the video processing procedure.

MFXVideoVPP_QueryIOSurf(session, &init_param, response);

allocate_pool_of_surfaces(in_pool, response[0].NumFrameSuggested);

allocate_pool_of_surfaces(out_pool, response[1].NumFrameSuggested);

MFXVideoVPP_Init(session, &init_param);

in=find_unlocked_surface_and_fill_content(in_pool);

out=find_unlocked_surface_from_the_pool(out_pool);

for (;;) {

sts=MFXVideoVPP_RunFrameVPPAsync(session,in,out,aux,&syncp);

if (sts==MFX_ERR_MORE_SURFACE || sts==MFX_ERR_NONE) {

MFXVideoCORE_SyncOperation(session,syncp,INFINITE);

process_output_frame(out);

out=find_unlocked_surface_from_the_pool(out_pool);

}

if (sts==MFX_ERR_MORE_DATA && in==NULL)

break;

if (sts==MFX_ERR_NONE || sts==MFX_ERR_MORE_DATA) {

in=find_unlocked_surface(in_pool);

fill_content_for_video_processing(in);

if (end_of_input_sequence())

in=NULL;

}

}

MFXVideoVPP_Close(session);

free_pool_of_surfaces(in_pool);

free_pool_of_surfaces(out_pool);

The following describes a few key points:

The application uses the MFXVideoVPP_QueryIOSurf function to obtain the number of frame surfaces needed for input and output. The application must allocate two frame surface pools, one for the input and the other for the output.

The video processing function MFXVideoVPP_RunFrameVPPAsync is asynchronous. The application must synchronize to make the output result ready, through the MFXVideoCORE_SyncOperation function.

The body of the video processing procedures covers three scenarios as follows:

If the number of frames consumed at input is equal to the number of frames generated at output, VPP returns MFX_ERR_NONE when an output is ready. The application must process the output frame after synchronization, as the MFXVideoVPP_RunFrameVPPAsync function is asynchronous. At the end of a sequence, the application must provide a NULL input to drain any remaining frames.

If the number of frames consumed at input is more than the number of frames generated at output, VPP returns MFX_ERR_MORE_DATA for additional input until an output is ready. When the output is ready, VPP returns MFX_ERR_NONE. The application must process the output frame after synchronization and provide a NULL input at the end of sequence to drain any remaining frames.

If the number of frames consumed at input is less than the number of frames generated at output, VPP returns either MFX_ERR_MORE_SURFACE (when more than one output is ready), or MFX_ERR_NONE (when one output is ready and VPP expects new input). In both cases, the application must process the output frame after synchronization and provide a NULL input at the end of sequence to drain any remaining frames.
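The three scenarios above can be exercised without the SDK by driving the same loop against a mock. In the sketch below, mock_vpp, mock_run, drive_vpp, and the ST_* codes are hypothetical stand-ins for the VPP component, MFXVideoVPP_RunFrameVPPAsync, and the MFX_ERR_* status codes. The mock's policy of flushing a partially filled input group as one output frame during draining is an assumption, not documented SDK behavior.

```c
#include <assert.h>

/* Hypothetical stand-ins for MFX_ERR_NONE, MFX_ERR_MORE_DATA and
 * MFX_ERR_MORE_SURFACE. */
typedef enum { ST_NONE, ST_MORE_DATA, ST_MORE_SURFACE } mock_status;

/* Mock VPP: consumes in_per_group inputs, then emits out_per_group outputs. */
typedef struct {
    int in_per_group;
    int out_per_group;
    int buffered_in;  /* inputs accepted but not yet turned into output */
    int pending_out;  /* outputs still owed for the last complete group */
} mock_vpp;

/* One call produces at most one output frame, like the real function. */
mock_status mock_run(mock_vpp *vpp, int has_input)
{
    if (vpp->pending_out == 0) {
        if (has_input) {
            vpp->buffered_in++;
            if (vpp->buffered_in < vpp->in_per_group)
                return ST_MORE_DATA;          /* no output yet */
            vpp->buffered_in = 0;
            vpp->pending_out = vpp->out_per_group;
        } else if (vpp->buffered_in > 0) {
            vpp->buffered_in = 0;             /* draining: flush partial group */
            vpp->pending_out = 1;
        } else {
            return ST_MORE_DATA;              /* fully drained */
        }
    }
    vpp->pending_out--;
    return vpp->pending_out > 0 ? ST_MORE_SURFACE : ST_NONE;
}

/* Runs the loop from the pseudo code and returns the number of outputs. */
int drive_vpp(int in_per_group, int out_per_group, int num_inputs)
{
    mock_vpp vpp = { in_per_group, out_per_group, 0, 0 };
    int outputs = 0;
    int remaining = num_inputs;
    int has_input;

    assert(in_per_group > 0 && out_per_group > 0 && num_inputs >= 0);
    has_input = remaining > 0;
    if (has_input)
        remaining--;
    for (;;) {
        mock_status sts = mock_run(&vpp, has_input);
        if (sts == ST_MORE_SURFACE || sts == ST_NONE)
            outputs++;                        /* an output frame is ready */
        if (sts == ST_MORE_DATA && !has_input)
            break;                            /* drained with NULL input */
        if (sts == ST_NONE || sts == ST_MORE_DATA) {
            has_input = remaining > 0;        /* fetch next input or NULL */
            if (has_input)
                remaining--;
        }
    }
    return outputs;
}
```

With this mock, drive_vpp(1, 1, 5) models the equal-rate scenario, drive_vpp(2, 1, 5) models more inputs than outputs, and drive_vpp(1, 2, 3) models more outputs than inputs. Note that the input is kept unchanged after ST_MORE_SURFACE, mirroring the pseudo code, where in is refreshed only on MFX_ERR_NONE or MFX_ERR_MORE_DATA.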

Configuration¶

The SDK configures the video processing pipeline operation based on the difference between the input and output formats, specified in the mfxVideoParam structure. A few examples follow:

When the input color format is YUY2 and the output color format is NV12, the SDK enables color conversion from YUY2 to NV12.

When the input is interlaced and the output is progressive, the SDK enables deinterlacing.

When the input is single field and the output is interlaced or progressive, the SDK enables field weaving, optionally with deinterlacing.

When the input is interlaced and the output is single field, the SDK enables field splitting.

In addition to specifying the input and output formats, the application can provide hints to fine-tune the video processing pipeline operation. The application can disable filters in the pipeline by using the mfxExtVPPDoNotUse structure, enable them by using the mfxExtVPPDoUse structure, and configure them by using dedicated configuration structures. See Table 4 for the complete list of configurable video processing filters, their IDs, and their configuration structures. See the ExtendedBufferID enumerator for more details.

The SDK ensures that all filters necessary to convert the input format to the output format are included in the pipeline. However, the SDK can skip some optional filters even if the application explicitly requests them, for example, because of limitations of the underlying hardware. To notify the application about a skipped filter, the SDK returns the warning MFX_WRN_FILTER_SKIPPED. The application can retrieve the list of active filters by attaching the mfxExtVPPDoUse structure to the mfxVideoParam structure and calling the MFXVideoVPP_GetVideoParam function. The application must allocate enough memory for the filter list.

Configurable VPP filters:

| Filter ID | Configuration structure |
|---|---|
| MFX_EXTBUFF_VPP_DENOISE | mfxExtVPPDenoise |
| MFX_EXTBUFF_VPP_MCTF | mfxExtVppMctf |
| MFX_EXTBUFF_VPP_DETAIL | mfxExtVPPDetail |
| MFX_EXTBUFF_VPP_FRAME_RATE_CONVERSION | mfxExtVPPFrameRateConversion |
| MFX_EXTBUFF_VPP_IMAGE_STABILIZATION | mfxExtVPPImageStab |
| MFX_EXTBUFF_VPP_PICSTRUCT_DETECTION | none |
| MFX_EXTBUFF_VPP_PROCAMP | mfxExtVPPProcAmp |
| MFX_EXTBUFF_VPP_FIELD_PROCESSING | mfxExtVPPFieldProcessing |

Example of Video Processing configuration:

/* enable image stabilization filter with default settings */

mfxExtVPPDoUse du;

mfxU32 al=MFX_EXTBUFF_VPP_IMAGE_STABILIZATION;

du.Header.BufferId=MFX_EXTBUFF_VPP_DOUSE;

du.Header.BufferSz=sizeof(mfxExtVPPDoUse);

du.NumAlg=1;

du.AlgList=&al;

/* configure the mfxVideoParam structure */

mfxVideoParam conf;

mfxExtBuffer *eb=(mfxExtBuffer *)&du;

memset(&conf,0,sizeof(conf));

conf.IOPattern=MFX_IOPATTERN_IN_SYSTEM_MEMORY | MFX_IOPATTERN_OUT_SYSTEM_MEMORY;

conf.NumExtParam=1;

conf.ExtParam=&eb;

conf.vpp.In.FourCC=MFX_FOURCC_YV12;

conf.vpp.Out.FourCC=MFX_FOURCC_NV12;

conf.vpp.In.Width=conf.vpp.Out.Width=1920;

conf.vpp.In.Height=conf.vpp.Out.Height=1088;

/* video processing initialization */

MFXVideoVPP_Init(session, &conf);

Region of Interest¶

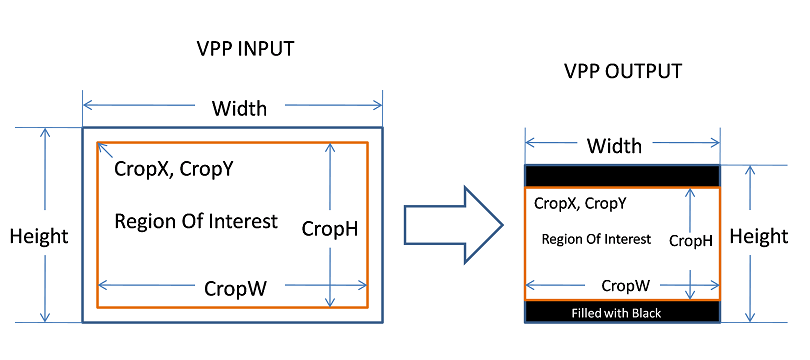

During video processing operations, the application can specify a region of interest for each frame, as illustrated below:

VPP Region of Interest Operation:

Specifying a region of interest guides the resizing function to achieve special effects such as resizing from 16:9 to 4:3 while keeping the aspect ratio intact. Use the CropX, CropY, CropW and CropH parameters in the mfxVideoParam structure to specify a region of interest.

Examples of VPP Operations on Region of Interest:

| Operation | VPP Input Width/Height | VPP Input CropX, CropY, CropW, CropH | VPP Output Width/Height | VPP Output CropX, CropY, CropW, CropH |
|---|---|---|---|---|
| Cropping | 720x480 | 16,16,688,448 | 720x480 | 16,16,688,448 |
| Resizing | 720x480 | 0,0,720,480 | 1440x960 | 0,0,1440,960 |
| Horizontal stretching | 720x480 | 0,0,720,480 | 640x480 | 0,0,640,480 |
| 16:9 to 4:3 with letterboxing at the top and bottom | 1920x1088 | 0,0,1920,1088 | 720x480 | 0,36,720,408 |
| 4:3 to 16:9 with pillarboxing at the left and right | 720x480 | 0,0,720,480 | 1920x1088 | 144,0,1632,1088 |
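The output crop values in the letterboxing and pillarboxing rows can be reproduced by scaling the input to fit the output while preserving the input width-to-height ratio, then centering it. The sketch below is one way to do that calculation. The crop_rect type and fit_keep_aspect function are hypothetical names, and the code assumes the ratio is taken from the pixel dimensions alone; it ignores any alignment requirements an implementation may impose.

```c
#include <assert.h>

/* Hypothetical rectangle type mirroring CropX, CropY, CropW and CropH. */
typedef struct { int x, y, w, h; } crop_rect;

/* Computes a centered output region that preserves the in_w:in_h ratio
 * inside an out_w x out_h frame. Not an SDK function. */
crop_rect fit_keep_aspect(int in_w, int in_h, int out_w, int out_h)
{
    crop_rect r;

    assert(in_w > 0 && in_h > 0 && out_w > 0 && out_h > 0);
    if ((long long)in_w * out_h >= (long long)in_h * out_w) {
        /* Input is relatively wider: use the full output width and
         * leave bars at the top and bottom (letterboxing). */
        r.w = out_w;
        r.h = (int)((long long)out_w * in_h / in_w);
    } else {
        /* Input is relatively taller: use the full output height and
         * leave bars at the left and right (pillarboxing). */
        r.h = out_h;
        r.w = (int)((long long)out_h * in_w / in_h);
    }
    r.x = (out_w - r.w) / 2;
    r.y = (out_h - r.h) / 2;
    return r;
}
```

For example, fit_keep_aspect(1920, 1088, 720, 480) gives the region 0,36,720,408, and fit_keep_aspect(720, 480, 1920, 1088) gives 144,0,1632,1088, matching the last two rows of the table.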

Multi-view video processing¶

The SDK video processing supports processing multiple views. For video processing initialization, the application needs to attach the mfxExtMVCSeqDesc structure to the mfxVideoParam structure and call the MFXVideoVPP_Init function. The function saves the view identifiers. During video processing, the SDK processes each view independently, one view at a time. The SDK refers to the FrameID field of the mfxFrameInfo structure to configure each view according to its processing pipeline. If the video processing source frame is not the output of the SDK MVC decoder, the application needs to fill the FrameID field before calling the MFXVideoVPP_RunFrameVPPAsync function. The following pseudo code illustrates the procedure:

mfxExtBuffer *eb;

mfxExtMVCSeqDesc seq_desc;

mfxVideoParam init_param;

init_param.ExtParam = &eb;

init_param.NumExtParam=1;

eb=(mfxExtBuffer *)&seq_desc;

/* init VPP */

MFXVideoVPP_Init(session, &init_param);

/* perform processing */

for (;;) {

MFXVideoVPP_RunFrameVPPAsync(session,in,out,aux,&syncp);

MFXVideoCORE_SyncOperation(session,syncp,INFINITE);

}

/* close VPP */

MFXVideoVPP_Close(session);

Transcoding Procedures¶

The application can use the SDK encoding, decoding and video processing functions together for transcoding operations. This section describes the key aspects of connecting two or more SDK functions together.

Asynchronous Pipeline¶